Ban 'killer robots' to protect fundamental moral and legal principles

- Written by Bonnie Docherty, Lecturer on Law and Associate Director of Armed Conflict and Civilian Protection, International Human Rights Clinic, Harvard Law School, Harvard University

When drafting a treaty on the laws of war[1] at the end of the 19th century, diplomats could not foresee the future of weapons development. But they did adopt a legal and moral standard for judging new technology not covered by existing treaty language.

This standard, known as the Martens Clause[2], has survived generations of international humanitarian law and gained renewed relevance in a world where autonomous weapons are on the brink of making their own determinations about whom to shoot and when. The Martens Clause calls on countries not to use weapons that depart “from the principles of humanity and from the dictates of public conscience.”

I was the lead author of a new report[3] by Human Rights Watch[4] and the Harvard Law School International Human Rights Clinic[5] that explains why fully autonomous weapons would run counter to the principles of humanity and the dictates of public conscience. We found that to comply with the Martens Clause, countries should adopt a treaty banning the development, production and use of these weapons[6].

Representatives of more than 70 nations will gather from August 27 to 31 at the United Nations in Geneva to debate how to address the problems with what they call lethal autonomous weapon systems. These countries, which are parties to the Convention on Conventional Weapons[7], have discussed the issue for five years. My co-authors and I believe it is time they took action and agreed to start negotiating a ban next year.

Making rules for the unknowable

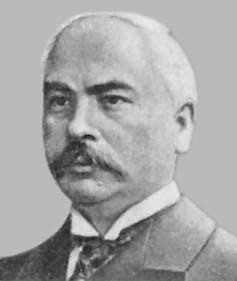

Russian diplomat Fyodor Fyodorovich Martens, for whom the Martens Clause is named.

Wikimedia Commons[8]

The Martens Clause provides a baseline of protection for civilians and soldiers in the absence of specific treaty law. The clause also sets out a standard for evaluating new situations and technologies that were not previously envisioned.

Fully autonomous weapons, sometimes called “killer robots,” would select and engage targets without meaningful human control. They would be a dangerous step beyond current armed drones because there would be no human in the loop to determine when to fire and at what target. Although fully autonomous weapons do not yet exist, China, Israel, Russia, South Korea, the United Kingdom and the United States are all working to develop[9] them. These countries argue that the technology would process information faster and keep soldiers off the battlefield.

The possibility that fully autonomous weapons could soon become a reality makes it imperative for those and other countries to apply the Martens Clause and assess whether the technology would offend basic humanity and the public conscience. Our analysis finds that fully autonomous weapons would fail the test on both counts.

Principles of humanity

The history of the Martens Clause shows that it is a fundamental principle of international humanitarian law. Originating in the 1899 Hague Convention[10], versions of it appear in all four Geneva Conventions[11] and Additional Protocol I[12]. It is cited in numerous[13] disarmament[14] treaties[15]. In 1995, concerns under the Martens Clause motivated countries to adopt a preemptive ban on blinding lasers[16].

The principles of humanity require humane treatment of others and respect for human life and dignity. Fully autonomous weapons could not meet these requirements because they would be unable to feel compassion, an emotion that inspires people to minimize suffering and death. The weapons would also lack the legal and ethical judgment necessary to ensure that they protect civilians in complex and unpredictable conflict situations.

Under human supervision – for now.

Pfc. Rhita Daniel, U.S. Marine Corps[17]

In addition, as inanimate machines, these weapons could not truly understand the value of an individual life or the significance of its loss. Their algorithms would translate human lives into numerical values. By making lethal decisions based on such algorithms, they would reduce their human targets – whether civilians or soldiers – to objects, undermining their human dignity.

Dictates of public conscience

The growing opposition to fully autonomous weapons shows that they also conflict with the dictates of public conscience. Governments, experts and the general public have all objected, often on moral grounds, to the possibility of losing human control over the use of force.

To date, 26 countries[18] have expressly supported a ban, including China. Most countries[19] that have spoken at the U.N. meetings on conventional weapons have called for maintaining some form of meaningful human control over the use of force. Requiring such control is effectively the same as banning weapons that operate without a person who decides when to kill.

Thousands of scientists and artificial intelligence experts[20] have endorsed a prohibition and demanded action from the United Nations. In July 2018, they issued a pledge not to assist[21] with the development or use of fully autonomous weapons. Major corporations[22] have also called for the prohibition.

A robot forms part of a protest against ‘killer robots’ in front of the Houses of Parliament and Westminster Abbey in London.

Luke MacGregor/Reuters[23]

More than 160 faith leaders[24] and more than 20 Nobel Peace Prize laureates[25] have similarly condemned the technology and backed a ban. Several international[26] and national[27] public opinion polls have found that a majority of people who responded opposed developing and using fully autonomous weapons.

The Campaign to Stop Killer Robots[28], a coalition of 75 nongovernmental organizations from 42 countries, has led opposition by nongovernmental groups. Human Rights Watch, for which I work, co-founded and coordinates the campaign.

Other problems with killer robots

Fully autonomous weapons would threaten more[29] than humanity and the public conscience. They would likely violate other key rules of international law. Their use would create a gap in accountability because no one could be held individually liable for the unforeseeable actions of an autonomous robot.

Furthermore, the existence of killer robots would spark widespread proliferation and an arms race – dangerous developments made worse by the fact that fully autonomous weapons would be vulnerable to hacking or technological failures.

Bolstering the case for a ban, our Martens Clause assessment highlights in particular how delegating life-and-death decisions to machines would violate core human values. Our report finds that there should always be meaningful human control over the use of force. We urge countries at this U.N. meeting to work toward a new treaty that would save people from lethal attacks made without human judgment or compassion. A clear ban on fully autonomous weapons would reinforce the longstanding moral and legal foundations of international humanitarian law articulated in the Martens Clause.

References

- [1] treaty on the laws of war (www.britannica.com)

- [2] Martens Clause (www.icrc.org)

- [3] new report (www.hrw.org)

- [4] Human Rights Watch (www.hrw.org)

- [5] Harvard Law School International Human Rights Clinic (hrp.law.harvard.edu)

- [6] weapons (theconversation.com)

- [7] Convention on Conventional Weapons (www.unog.ch)

- [8] Wikimedia Commons (commons.wikimedia.org)

- [9] all working to develop (www.stopkillerrobots.org)

- [10] 1899 Hague Convention (ihl-databases.icrc.org)

- [11] Geneva Conventions (www.icrc.org)

- [12] Additional Protocol I (ihl-databases.icrc.org)

- [13] numerous (ihl-databases.icrc.org)

- [14] disarmament (ihl-databases.icrc.org)

- [15] treaties (ihl-databases.icrc.org)

- [16] preemptive ban on blinding lasers (ihl-databases.icrc.org)

- [17] Pfc. Rhita Daniel, U.S. Marine Corps (commons.wikimedia.org)

- [18] 26 countries (www.stopkillerrobots.org)

- [19] Most countries (www.theguardian.com)

- [20] scientists and artificial intelligence experts (futureoflife.org)

- [21] pledge not to assist (futureoflife.org)

- [22] Major corporations (www.clearpathrobotics.com)

- [23] Luke MacGregor/Reuters (pictures.reuters.com)

- [24] faith leaders (www.paxforpeace.nl)

- [25] Nobel Peace Prize laureates (nobelwomensinitiative.org)

- [26] international (www.openroboethics.org)

- [27] national (duckofminerva.dreamhosters.com)

- [28] Campaign to Stop Killer Robots (www.stopkillerrobots.org)

- [29] threaten more (www.hrw.org)
