Artificial intelligence outperforms repetitive animal tests in identifying toxic chemicals

- Written by Thomas Hartung, Professor of Environmental Health and Engineering, Johns Hopkins University

Most consumers would be dismayed by how little we know about the majority of chemicals. Only 3 percent of industrial chemicals – mostly drugs and pesticides – are comprehensively tested. Most of the 80,000 to 140,000 chemicals in consumer products have not been tested at all, or have been examined only superficially to see what harm they may do locally, at the site of contact, and at extremely high doses.

I am a physician and former head of the European Center for the Validation of Alternative Methods of the European Commission[1] (2002-2008), and I am dedicated to finding faster, cheaper and more accurate methods of testing the safety of chemicals. To that end, I now lead a new program at Johns Hopkins University to revamp the safety sciences.

As part of this effort, we have now developed a computer method of testing chemicals that could save more than US$1 billion annually and more than 2 million animals. Especially at a time when the government is rolling back regulations[2] on the chemical industry, new methods to identify dangerous substances are critical for human and environmental health.

How the computer took over from the lab rat

Our computerized testing is possible because of Europe’s REACH[3] (Registration, Evaluation, Authorisation and Restriction of Chemicals) legislation: It was the first regulation worldwide to systematically log existing industrial chemicals. Over the decade from 2008 to 2018, at least those chemicals produced or marketed at more than 1 ton per year in Europe had to be registered, with the amount of safety test information required increasing with the quantity sold.

Thousands of new chemicals are developed and used each year in consumer products without being tested for toxicity.

By Garsya/shutterstock.com[4]

Our team published a critical analysis[5] of European testing demands in 2009, which concluded that the demands of the legislation could only be met by adopting new methods of chemical analysis. Europe does not track new chemicals below an annual market or production volume of 1 ton. But the similarly sized U.S. chemical industry brings about 1,000 chemicals in this tonnage range to the market each year, even though Europe does a much better job of requesting safety data. This highlights how many new substances should be assessed every year even when they are produced in quantities below 1 ton, which are not regulated in Europe. Inexpensive and fast computer methods lend themselves to this purpose.

Our group took advantage of the fact that REACH made its safety data on registered chemicals publicly available. In 2016, we reformatted the REACH data, making it machine-readable and creating the largest toxicological database[6] ever. It logged 10,000 chemicals and connected them to the 800,000 associated studies.

This laid the foundation for testing whether animal tests – considered the gold standard for safety testing – were reproducible. Some chemicals were tested surprisingly often in the same animal test. For example, two chemicals were tested more than 90 times in rabbit eyes; 69 chemicals were tested more than 45 times. This enormous waste of animals, however, enabled us to study whether these animal tests yielded consistent results.

Our analysis showed that these tests, which consume more than 2 million animals per year worldwide, are simply not very reliable: A chemical known to be toxic is shown to be toxic in only about 70 percent of repeated animal tests. These were animal tests done according to OECD test guidelines under Good Laboratory Practice – which is to say, the best you can get. This clearly shows that the quality of these tests is overrated and that agencies must try alternative strategies to assess the toxicity of various compounds.
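One way to make the idea of reproducibility concrete is sketched below. It is only an illustration under stated assumptions, not the analysis behind the 70 percent figure: the function name `toxic_repeat_rate`, the data layout and the example results are all hypothetical. The sketch takes every ordered pair of tests run on the same chemical and asks how often a toxic first result is followed by a toxic second result.

```python
from itertools import permutations


def toxic_repeat_rate(results_by_chemical: dict[str, list[bool]]) -> float:
    """Share of ordered test pairs on the same chemical in which a toxic
    first result is followed by a toxic second result."""
    toxic_first = 0  # pairs whose first test said "toxic"
    toxic_both = 0   # of those, pairs whose second test also said "toxic"
    for results in results_by_chemical.values():
        for first, second in permutations(results, 2):
            if first:
                toxic_first += 1
                if second:
                    toxic_both += 1
    if toxic_first == 0:
        raise ValueError("no toxic results to condition on")
    return toxic_both / toxic_first


# Hypothetical repeated test outcomes (True = test reported "toxic").
example_results = {
    "chem_a": [True, True, False],
    "chem_b": [True, True],
}
rate = toxic_repeat_rate(example_results)
print(f"Toxic result reproduced in {rate:.0%} of repeat pairs")
```

A chemical that was tested only once contributes no pairs, which is why heavily retested chemicals are what make this kind of check possible at all.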

Big data more reliable than animal testing

Following the vision of Toxicology for the 21st Century[7], a movement led by U.S. agencies[8] to revamp safety testing, important work was carried out by my Ph.D. student Tom Luechtefeld at the Johns Hopkins Center for Alternatives to Animal Testing[9]. Teaming up with Underwriters Laboratories, we have now leveraged an expanded database and machine learning to predict toxic properties. As we report[10] in the journal Toxicological Sciences, we developed a novel algorithm and database for analyzing chemicals and determining their toxicity – what we call read-across structure activity relationship, RASAR.

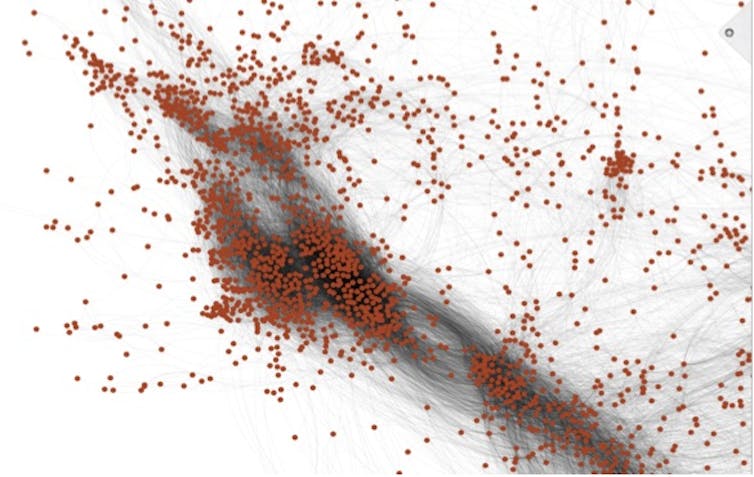

This graphic reveals a small part of the chemical universe. Each dot represents a different chemical. Chemicals that are close together have similar structures and often similar properties.

Thomas Hartung, CC BY-SA[11]

To do this, we first created an enormous database with 10 million chemical structures by adding more public databases filled with chemical data, which, if you crunch the numbers, represent 50 trillion pairs of chemicals. A supercomputer then created a map of the chemical universe, in which chemicals are positioned close together if they share many structures in common and far apart if they don’t. Most of the time, any molecule close to a toxic molecule is also dangerous. This is even more likely if many toxic substances are close by and harmless substances are far away. Any substance can now be analyzed by placing it into this map.
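The pair count can be checked with a few lines of arithmetic, and the notion of "sharing many structures" can be illustrated with a simple similarity score. The sketch below is a minimal illustration, not the RASAR implementation: it assumes each chemical is described by a set of structural feature labels (the labels here are made up) and uses the Jaccard index, a common set-overlap measure, as a stand-in for whatever similarity measure the real system uses.

```python
from math import comb

# Number of unordered pairs among 10 million chemical structures.
n_structures = 10_000_000
n_pairs = comb(n_structures, 2)
print(f"{n_pairs:,} pairs")  # just under 50 trillion


def jaccard_similarity(features_a: set, features_b: set) -> float:
    """Fraction of structural features shared by two chemicals (0.0 to 1.0)."""
    union = features_a | features_b
    if not union:
        return 0.0
    return len(features_a & features_b) / len(union)


# Hypothetical feature sets, for illustration only.
chem_x = {"ring", "hydroxyl", "chlorine"}
chem_y = {"ring", "hydroxyl", "amine"}
similarity_xy = jaccard_similarity(chem_x, chem_y)
print(similarity_xy)  # 2 shared features out of 4 distinct ones
```

Chemicals with a score near 1 would sit close together on the map, and chemicals with a score near 0 would sit far apart.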

If this sounds simple, it’s not. It requires half a billion mathematical calculations per chemical to see where it fits. The chemical neighborhood focuses on 74 characteristics that are used to predict the properties of a substance. Using the properties of the neighboring chemicals, we can predict whether an untested chemical is hazardous. For example, for predicting whether a chemical will cause eye irritation, our computer program uses not only information from similar chemicals that were tested on rabbit eyes, but also information on skin irritation. This is because what typically irritates the skin also harms the eye.
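The idea of predicting from neighbors can be sketched with a toy example. This is not the RASAR algorithm, whose 74 characteristics and model are not spelled out here. It is a plain similarity-weighted nearest-neighbor vote, and the function names, feature labels and toxicity labels are all hypothetical.

```python
def jaccard(a: frozenset, b: frozenset) -> float:
    """Set overlap between two feature sets (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def predict_hazard(query: frozenset,
                   labeled: list[tuple[frozenset, bool]],
                   k: int = 3) -> float:
    """Similarity-weighted share of toxic chemicals among the k tested
    chemicals most similar to the query (0.0 = harmless, 1.0 = toxic)."""
    scored = sorted(
        ((jaccard(query, feats), toxic) for feats, toxic in labeled),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    total = sum(sim for sim, _ in scored)
    if total == 0:
        return 0.0  # no similar neighbors, so no evidence of hazard
    return sum(sim for sim, toxic in scored if toxic) / total


# Hypothetical tested chemicals: (structural features, found toxic?).
tested = [
    (frozenset({"f1", "f2", "f3"}), True),
    (frozenset({"f1", "f2", "f4"}), True),
    (frozenset({"f5", "f6"}), False),
    (frozenset({"f2", "f7", "f8"}), False),
]
untested = frozenset({"f1", "f2", "f3", "f4"})
score = predict_hazard(untested, tested)
print(f"Hazard score: {score:.2f}")
```

In this toy data the two closest neighbors are both toxic, so the untested chemical receives a high hazard score. Evidence from a related test, such as skin irritation results, could in principle be added as extra labeled neighbors or extra features, but how the real program combines them is not shown here.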

How well does the computer identify toxic chemicals?

This method will be used for new untested substances. However, if you do this for chemicals for which you actually have data, and compare prediction with reality, you can test how well this prediction works. We did this for 48,000 chemicals that were well characterized for at least one aspect of toxicity, and we found the toxic substances in 89 percent of cases.
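Comparing prediction with reality comes down to simple counting. The sketch below assumes that the "89 percent" figure is a sensitivity, meaning the share of truly toxic chemicals that the program flags as toxic. That reading, the function name and the six example chemicals are assumptions made for illustration.

```python
def sensitivity(actual: list[bool], predicted: list[bool]) -> float:
    """Share of truly toxic chemicals that were also predicted toxic."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    true_positives = sum(1 for a, p in zip(actual, predicted) if a and p)
    false_negatives = sum(1 for a, p in zip(actual, predicted) if a and not p)
    toxic_total = true_positives + false_negatives
    if toxic_total == 0:
        raise ValueError("no toxic chemicals in the reference data")
    return true_positives / toxic_total


# Hypothetical reference results and predictions (True = toxic).
actual_toxic = [True, True, True, True, False, False]
predicted_toxic = [True, True, True, False, False, True]
found = sensitivity(actual_toxic, predicted_toxic)
print(f"Toxic substances found: {found:.0%}")
```

Sensitivity says nothing about harmless chemicals wrongly flagged as toxic, so a full evaluation would also report how often that happens.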

This is clearly more accurate than the corresponding animal tests, which only yield the correct answer 70 percent of the time[12]. The RASAR will now be formally validated by an interagency committee of 16 U.S. agencies, including the EPA and FDA, that will challenge our computer program with chemicals for which the outcome is unknown. This is a prerequisite for acceptance and use in many countries and industries.

The potential is enormous: The RASAR approach is in essence based on chemical data that was registered for the 2010 and 2013 REACH deadlines. If our estimates are correct, and if chemical producers had stopped registering chemicals after 2013 and had used our RASAR program instead, we would have saved 2.8 million animals and $490 million in testing costs – and received more reliable data. We have to admit that this is a very theoretical calculation, but it shows how valuable this approach could be for other regulatory programs and safety assessments.

In the future, a chemist could consult RASAR before even synthesizing their next chemical to check whether the new structure will have problems. Or a product developer could pick alternatives to toxic substances to use in their products. This is a powerful technology that is only starting to show its full potential.

References

- ^ I am a physician and former head of the European Center for the Validation of Alternative Methods of the European Commission (scholar.google.com)

- ^ government is rolling back regulations (www.houstonchronicle.com)

- ^ Europe’s REACH (ec.europa.eu)

- ^ By Garsya/shutterstock.com (www.shutterstock.com)

- ^ Our team published a critical analysis (doi.org)

- ^ the largest toxicological database (doi.org)

- ^ Toxicology for the 21st Century (doi.org)

- ^ a movement led by U.S. agencies (ncats.nih.gov)

- ^ Center for Alternatives to Animal Testing (caat.jhsph.edu)

- ^ As we report (doi.org)

- ^ CC BY-SA (creativecommons.org)

- ^ the correct answer 70 percent of the time (doi.org)
