Two gaps to fill for the 2021-2022 winter wave of COVID-19 cases

- Written by Maciej F. Boni, Associate Professor of Biology, Penn State

Epidemiologists – like oncologists and climate scientists – hate to be proved right. A year ago this week, the communications rush began from epidemiologists in academia to the public and to local governments about the imminent dangers of the COVID-19 pandemic, in the face of a weak federal response.

St. Patrick’s Day parades were canceled with days to spare. Hospitals were turning suspected positive cases away because of a lack of tests. Epidemiologists[1] predicted[2] that[3] hundreds of thousands of Americans would die over the following year, with the upper bounds above a million[4]. This was our country’s biggest challenge since 1941, and we did not meet it.

Despite the stream of bad news, a major success of 2020 was the pace of vaccine development. A 10-month sprint ending with completed phase 3 clinical trials for two vaccine candidates (and a third one[5] last week) is an incredible achievement. Uncredited here is the experience[6] gained[7] by the global health community during the rollout of clinical trials[8] in the West African Ebola epidemic in 2014-2015. Science during a crisis is difficult, and the scientific community responded in 2020 with an all-hands effort to design and initiate scores of trials on a moment’s notice.

But amid the scientific progress, what did we scientists neglect or get wrong? What will haunt us in eight months, when SARS-CoV-2 cases begin surging again, and we wonder if the winter epidemic trajectory will bring 30,000 or 300,000 more deaths? If vaccine efficacy drops, high death rates are a real possibility.

Our two big misses in 2020 were in behavioral modeling and real-time seroepidemiology[9], the study of antibody measurements in blood samples. As an epidemiologist[10] with experience in the field, lab and modeling aspects of pandemic response, I believe that we must address these two gaps for the U.S. to have better forecasting, better communication and better management next winter. Even with effective vaccines, the new coronavirus will be with us for many years.

Medical personnel move a deceased patient to a refrigerated truck serving as a makeshift morgue at Brooklyn Hospital Center on April 9, 2020, in New York City.

Angela Weiss/AFP via Getty Images, CC BY-SA[11][12]

Understanding human behavior

First, scientists do not understand the general feedback loop[13] between virus transmission and human behavior. When case and death numbers rise, people get fearful and comply more fully with common-sense recommendations like mask-wearing, distancing, hygiene, reduced contacts and no group events. But when these numbers fall, people feel safer and resume risky behavior, setting the stage for a new increase in cases.

In 2020, we public health experts missed an opportunity to quantify this dynamic and estimate the delay inherent in a population’s behavioral response. Even the recent decline in cases in January was attributed[14] to behavioral change by process of elimination only: It did not seem to be caused by weather, vaccines or new restrictions and was thus credited to human behavior and social distancing. But we still lack statistical evidence for how or when this started.
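To make the feedback loop and its delay concrete, here is a minimal sketch of a toy model, not a fitted or validated one. It assumes a discrete-time SIR epidemic in which the contact rate falls as the prevalence people perceived some days earlier rises. The function name `simulate` and every parameter value (`beta0`, `gamma`, `sensitivity`, `delay`) are hypothetical choices for illustration only.

```python
def simulate(days=365, beta0=0.3, gamma=0.1, sensitivity=200.0, delay=14, i0=1e-4):
    """Toy SIR model with a delayed behavioral response (illustrative assumptions only).

    Returns the daily infected fraction as a list of length days + 1.
    """
    s, i = 1.0 - i0, i0
    history = [i]
    for t in range(days):
        # People react to the prevalence they perceived `delay` days ago.
        perceived = history[max(0, t - delay)]
        # Assumed functional form: caution lowers the contact rate smoothly.
        beta = beta0 / (1.0 + sensitivity * perceived)
        new_infections = beta * s * i
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        history.append(i)
    return history


if __name__ == "__main__":
    no_feedback = simulate(sensitivity=0.0)
    with_feedback = simulate(sensitivity=200.0)
    print("peak infected fraction, no behavioral response:", max(no_feedback))
    print("peak infected fraction, delayed response:", max(with_feedback))
```

Varying `delay` and `sensitivity` in a sketch like this shows why both quantities matter: they are exactly the parameters that would need to be estimated from data before such a model could inform the timing of interventions.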

Why did we miss this? Epidemiologists had been preparing for a deadly pandemic for two decades. Our anchoring bias came from experiences with past influenza pandemics and hypothetical avian influenza pandemics[15], which have infection fatality ratios[16] (IFRs) that are either very low, around 0.05%, or very high, above 25%. No one prepared for an intermediate IFR of 0.5%, where a virus could circulate unnoticed long enough for researchers to miss the first clinical signals, and would not be gruesome enough to induce an immediate state of emergency.

Throughout the COVID-19 pandemic in the U.S., society’s reaction has wavered from urgency to complacency and back. Epidemiologists were not able to accurately predict these trends.

Going forward, we must develop data-centered models of population behavioral responses that occur during COVID-19 epidemics. For example, does experience with wintertime influenza act as an anchor, driving people to be more or less cautious as case numbers rise above or below “normal” flu rates? If scientists and public health experts can understand this behavior, we will know better when and how to institute new nonpharmaceutical interventions, such as gathering size limits or work-from-home orders. Then we will have better and more scientific justifications for early lockdowns and early interventions, with public health messaging stating clearly that an early lockdown means a short lockdown.

Behavioral modeling can also unlock the power of rapid at-home tests[17], a promising public health tool that received no coordinated support over the past year. How does someone react if others are infected? How do people react[18] if they themselves are infected? Without the foundational behavioral analysis in place, we will not know how to deploy at-home tests to best facilitate more careful mixing behavior.

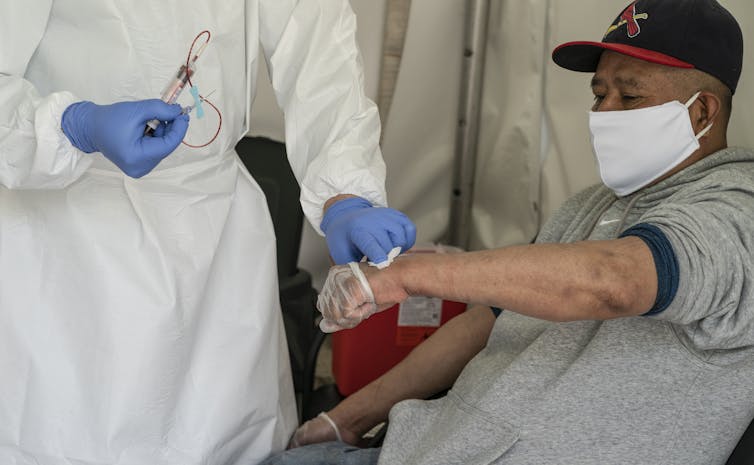

A laboratory technician takes an antibody test for COVID-19 at a community care center in New York City.

Lev Radin/Pacific Press/LightRocket via Getty Images, CC BY-SA[19][20]

Faster processing of antibody data

Our second big miss was in real-time analyses of antibody data to gauge how many Americans have been infected with the coronavirus. Real-time case numbers[21], hospitalizations[22] and mobility[23] data have been crucial for understanding the different phases of the COVID-19 pandemic.

But serum collection was neither preplanned nor routine, and samples were not processed quickly. Seroprevalence results then came[24] months[25] late[26], appearing in publications and preprints, but not aggregated in an easy-to-understand database.

With no universal standardization, misinterpretation of assay validations and serological thresholds was common. Scientists debated cross-sectional serology results, and study designs had no approach to correct for sampling biases[27] generated from correlations between past symptoms and study participation.
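The sampling-bias problem can be illustrated with a short worked example. This is a sketch under stated assumptions, not a method taken from any of the cited studies: it assumes previously infected people (for instance, those who recall symptoms) volunteer at a different rate than uninfected people, and that both participation rates are known. In practice those rates are rarely known, which is the difficulty. The function names and the numbers are hypothetical.

```python
def observed_seroprevalence(true_prev, p_join_infected, p_join_uninfected):
    """Seroprevalence a study would measure if participation depends on infection history."""
    positives = true_prev * p_join_infected
    negatives = (1.0 - true_prev) * p_join_uninfected
    return positives / (positives + negatives)


def corrected_seroprevalence(observed, p_join_infected, p_join_uninfected):
    """Undo the bias by weighting each group by the inverse of its participation rate."""
    positives = observed / p_join_infected
    negatives = (1.0 - observed) / p_join_uninfected
    return positives / (positives + negatives)


if __name__ == "__main__":
    # Hypothetical scenario: 5% truly infected; infected people are twice as likely to enroll.
    biased = observed_seroprevalence(0.05, p_join_infected=0.2, p_join_uninfected=0.1)
    print("observed:", biased)
    print("corrected:", corrected_seroprevalence(biased, 0.2, 0.1))
```

In this hypothetical scenario, a true seroprevalence of 5% would be reported as roughly 9.5%, nearly double, unless the study design records enough about participation to apply a correction.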

Today, we still lack confidence in estimates of the total number of Americans who have been infected, which complicates efforts to use the vaccine scale-up to accurately state what fraction of the country is now immune, or to plan for the inevitable outbreaks of new variants this spring and summer.

The key studies to prepare for next winter involve standardizing serological assays and estimating antibody[28] waning[29] rates[30] – that is, how quickly one’s antibody levels go down after infection. Measuring the post-infection waning of antibody concentrations allows us to define antibody thresholds for particular time points[31] after infection.

That’s a lot of jargon. Put more simply, if we know that antibodies wane to level X after three months, we can use this X to determine who has been infected in the past three months. This is not a true seroprevalence or attack-rate measurement, and that’s fine. It is a measure of the three-month attack rate or the six-month attack rate, depending on the threshold chosen, and it gives us an estimate of recent population-level infection rates. This new definition resolves the arbitrary threshold problem in serology, and allows studies to report the amount of recent population immunity, which is useful for public health decisions.
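The threshold idea can be sketched in a few lines. This is a simplified illustration, assuming that antibody levels decay exponentially from a common peak with a single half-life; real waning curves vary between individuals and assays. The function names, the peak level of 100 (arbitrary units), the 60-day half-life and the sample values are all hypothetical.

```python
def waning_threshold(days, peak_titer=100.0, half_life_days=60.0):
    """Antibody level X expected `days` after infection, under assumed exponential decay."""
    return peak_titer * 0.5 ** (days / half_life_days)


def recent_attack_rate(titers, days, peak_titer=100.0, half_life_days=60.0):
    """Fraction of samples at or above the threshold, i.e. likely infected within `days`."""
    threshold = waning_threshold(days, peak_titer, half_life_days)
    recent = sum(1 for titer in titers if titer >= threshold)
    return recent / len(titers)


if __name__ == "__main__":
    samples = [80.0, 40.0, 20.0, 10.0, 5.0, 2.0]  # hypothetical antibody measurements
    print("three-month threshold:", waning_threshold(90))
    print("three-month attack rate:", recent_attack_rate(samples, 90))
    print("six-month attack rate:", recent_attack_rate(samples, 180))
```

Choosing the 90-day or the 180-day threshold changes which samples count as recent infections, which is how one set of measurements can yield either a three-month or a six-month attack rate.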

In my view, we should have learned in 2020 that it is never too early to start preparations for epidemic control. Summer 2020 was a missed opportunity to revamp our approach to health care and epidemic response. The U.S. cannot again squander an entire summer and fail to prepare for the possibility that SARS-CoV-2 has one more nasty winter in store for us.

References

- [1] Epidemiologists (works.bepress.com)

- [2] predicted (www.cbsnews.com)

- [3] that (theconversation.com)

- [4] above a million (www.imperial.ac.uk)

- [5] third one (www.fda.gov)

- [6] experience (doi.org)

- [7] gained (www.who.int)

- [8] clinical trials (doi.org)

- [9] seroepidemiology (link.springer.com)

- [10] epidemiologist (www.huck.psu.edu)

- [11] Angela Weiss/AFP via Getty Images (www.gettyimages.com)

- [12] CC BY-SA (creativecommons.org)

- [13] feedback loop (doi.org)

- [14] was attributed (twitter.com)

- [15] hypothetical avian influenza pandemics (doi.org)

- [16] infection fatality ratios (twitter.com)

- [17] rapid at-home tests (doi.org)

- [18] react (doi.org)

- [19] Lev Radin/Pacific Press/LightRocket via Getty Images (www.gettyimages.com)

- [20] CC BY-SA (creativecommons.org)

- [21] case numbers (coronavirus.jhu.edu)

- [22] hospitalizations (covidtracking.com)

- [23] mobility (www.covid19mobility.org)

- [24] came (doi.org)

- [25] months (doi.org)

- [26] late (doi.org)

- [27] sampling biases (www.buzzfeednews.com)

- [28] antibody (doi.org)

- [29] waning (wwwnc.cdc.gov)

- [30] rates (doi.org)

- [31] particular time points (www.taylorfrancis.com)

Read more https://theconversation.com/two-gaps-to-fill-for-the-2021-2022-winter-wave-of-covid-19-cases-156169