Gender is personal – not computational

- Written by Foad Hamidi, Postdoctoral Research Associate in Information Systems, University of Maryland, Baltimore County

Imagine walking down the street and seeing advertising screens change their content based on how you walk, how you talk, or even the shape of your chest. These screens rely on hidden cameras, microphones and computers to guess if you’re male or female. This might sound futuristic, but patrons in a Norwegian pizzeria discovered it’s exactly what was happening: Women were seeing ads for salad and men were seeing ads for meat options. The software running a digital advertising board spilled the beans when it crashed and displayed its underlying code[1]. The motivation behind using this technology might have been to improve advertising quality or user experience. Nevertheless, many customers were unpleasantly surprised by it.

This sort of situation is not just creepy and invasive. It’s worse: Efforts at automatic gender recognition[2] – using algorithms to guess a person’s gender based on images, video or audio – raise significant social and ethical concerns that are not yet fully explored. Most current research on automatic gender recognition technologies focuses instead on technological details.

Our[3] recent[4] research[5] found that people with diverse gender identities, including those identifying as transgender or gender nonbinary, are particularly concerned that these systems could miscategorize them[6]. People who express their gender differently from stereotypical male and female norms already experience discrimination and harm[7] as a result of being miscategorized or misunderstood[8]. Ideally, technology designers should develop systems to make these problems less common, not more so.

Using algorithms to classify people

As digital technologies become more powerful and sophisticated, their designers are trying to use them to identify and categorize complex human characteristics[9], such as sexual orientation, gender and ethnicity[10]. The idea is that with enough training on abundant user data[11], algorithms can learn to analyze people’s appearance and behavior – and perhaps one day characterize people as well as, or even better than, other humans do.

Gender is a hard topic for people to handle. It’s a complex concept with important roles both as a cultural construct and a core aspect of an individual’s identity. Researchers, scholars and activists are increasingly revealing the diverse, fluid and multifaceted aspects of gender[12]. In the process, they find that ignoring this diversity can lead to both harmful experiences and social injustice[13]. For example, according to the 2016 National Transgender Survey[14], 47 percent of transgender participants stated that they had experienced some form of discrimination at their workplace due to their gender identity. More than half of transgender people who were harassed, assaulted or expelled because of their gender identity had attempted suicide.

Many people have, at one time or another, been surprised, confused or even angered to find themselves mistaken for a person of another gender. When that happens to someone who is transgender – as an estimated 0.6 percent of Americans, or 1.4 million people[15], are – it can cause considerable stress and anxiety[16].

Effects of automatic gender recognition

In our recent research, we interviewed 13 transgender and gender-nonconforming people[17] about their general impressions of automatic gender recognition technology. We also asked them to describe their responses to imaginary future scenarios where they might encounter it. All 13 participants were worried about this technology and doubted whether it could offer their community any benefits.

Of particular concern was the prospect of being misgendered by it; in their experience, gender is largely an internal, subjective characteristic, not something that is necessarily or entirely expressed outwardly. Therefore, neither humans nor algorithms can accurately read gender through physical features, such as the face, body or voice.

They described how being misgendered by algorithms could potentially feel worse than if humans did it. Technology is often perceived or believed to be objective and unbiased[18], so being wrongly categorized by an algorithm would emphasize the misconception that a transgender identity is inauthentic. One participant described how they would feel hurt if a “million-dollar piece of software developed by however many people” decided that they are not who they themselves believe they are.

Privacy and transparency

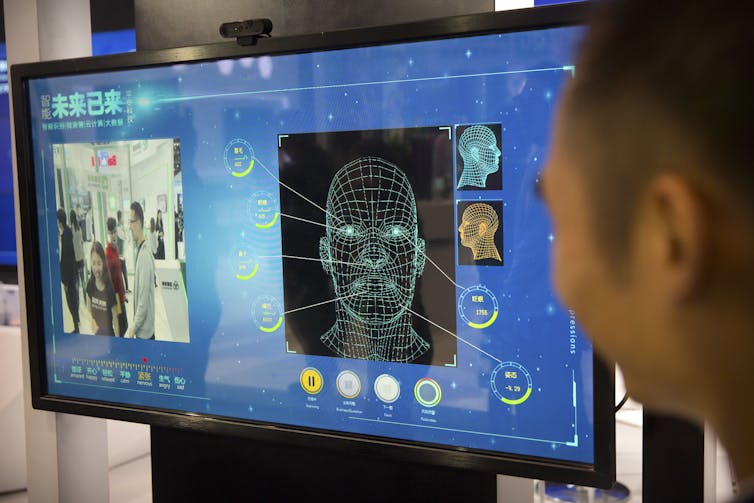

The people we interviewed shared the common public concern[19] that automated cameras could be used for surveillance without their consent or knowledge; for years, researchers and activists have raised red flags[20] about increasing threats to privacy in a world populated by sensors and cameras.

Facial recognition software can scan a crowd of people as they walk by.

AP Photo/Mark Schiefelbein[21]

But our participants described how the effects of these technologies could be greater for transgender people. For instance, they might be singled out as unusual because they look or behave differently from what the underlying algorithms expect. Some participants were even concerned that systems might falsely determine that they are trying to be someone else and deceive the system.

Their concerns also extended to cisgender people who might look or act differently from the majority, such as people of different races, people the algorithms perceive as androgynous, and people with unique facial structures. This already happens to people from minority racial and ethnic backgrounds, who are regularly misidentified by facial recognition technology[22]. For example, existing facial recognition technology in some cameras fails to properly detect the faces of Asian users and sends messages for them to stop blinking or to open their eyes[23].

Our interviewees wanted to know more about how automatic gender recognition systems work and what they’re used for. They didn’t want to know deep technical details, but did want to make sure the technology would not compromise their privacy or identity. They also wanted more transgender people involved in the early stages of design and development of these systems, well before they are deployed.

Creating inclusive automatic systems

Our results demonstrate how designers of automatic categorization technologies can inadvertently cause harm by making assumptions about the simplicity and predictability of human characteristics. Our research adds to a growing body of work[24] that attempts to more thoughtfully incorporate gender[25] into technology.

Minorities, including ethnic minorities and people with disabilities[26], have historically been left out of conversations about large-scale technology deployment. Yet scientists and designers alike know that including input from minority groups during the design process can lead to technical innovations that benefit all people[27]. We advocate for a more gender-inclusive and human-centric approach to automation that incorporates diverse perspectives.

As digital technologies develop and mature, they can lead to impressive innovations. But as humans direct that work, they should avoid amplifying human biases and prejudices[28] that are negative and limiting. In the case of automatic gender recognition, we do not necessarily conclude that these algorithms should be abandoned. Rather, designers of these systems should be inclusive of, and sensitive to, the diversity and complexity of human identity.

References

[1] crashed and displayed its underlying code (theoutline.com)

[2] automatic gender recognition (doi.org)

[3] Our (scholar.google.ca)

[4] recent (scholar.google.com)

[5] research (scholar.google.com)

[6] these systems could miscategorize them (doi.org)

[7] experience discrimination and harm (doi.org)

[8] being miscategorized or misunderstood (www.healthline.com)

[9] complex human characteristics (theconversation.com)

[10] sexual orientation, gender and ethnicity (medium.com)

[11] training on abundant user data (www.youtube.com)

[12] diverse, fluid and multifaceted aspects of gender (newsroom.ucla.edu)

[13] harmful experiences and social injustice (www.powells.com)

[14] 2016 National Transgender Survey (www.thetaskforce.org)

[15] 0.6 percent of Americans, or 1.4 million people (www.npr.org)

[16] considerable stress and anxiety (www.healthline.com)

[17] interviewed 13 transgender and gender-nonconforming people (doi.org)

[18] objective and unbiased (fivethirtyeight.com)

[19] common public concern (www.washingtontimes.com)

[20] raised red flags (techcrunch.com)

[21] AP Photo/Mark Schiefelbein (www.apimages.com)

[22] misidentified by facial recognition technology (undark.org)

[23] stop blinking or to open their eyes (content.time.com)

[24] growing body of work (oliverhaimson.com)

[25] thoughtfully incorporate gender (theconversation.com)

[26] ethnic minorities and people with disabilities (www.huffingtonpost.com)

[27] benefit all people (interactions.acm.org)

[28] avoid amplifying human biases and prejudices (www.technologyreview.com)

Read more http://theconversation.com/gender-is-personal-not-computational-90798