High-tech surveillance amplifies police bias and overreach

- Written by Andrew Guthrie Ferguson, Professor of Law, American University

Video of police[1] in riot gear clashing with unarmed protesters in the wake of the killing of George Floyd by Minneapolis police officer Derek Chauvin has filled social media feeds. Meanwhile, police surveillance of protesters[2] has remained largely out of sight.

Local, state and federal law enforcement organizations use an array of surveillance technologies to identify and track protesters, from facial recognition[3] to military-grade drones[4].

Police use of these national security-style surveillance techniques – justified as cost-effective tools that avoid human bias and error – has grown[5] hand in hand with the increased militarization of law enforcement. Extensive research, including my own[6], has shown that these expansive and powerful surveillance capabilities have exacerbated rather than reduced bias[7], overreach and abuse[8] in policing, and they pose a growing threat to civil liberties[9].

Police reform efforts are increasingly looking at law enforcement organizations’ use of surveillance technologies. In the wake of the current unrest, IBM[10], Amazon[11] and Microsoft[12] have put the brakes on police use of the companies’ facial recognition technology. And police reform bills submitted by the Democrats in the U.S. House of Representatives call for regulating police use of facial recognition[13] systems.

A decade of big data policing

We haven’t always lived in a world of police cameras, smart sensors and predictive analytics. Recession and rage fueled the initial rise of big data policing[14] technologies. In 2009, in the face of federal, state and local budget cuts[15] caused by the Great Recession, police departments began looking for ways to do more with less. Technology companies rushed to fill the gaps, offering new forms of data-driven policing[16] as models of efficiency and cost reduction.

Then, in 2014, the police killing of Michael Brown in Ferguson, Missouri, upended already fraying police and community relationships. The killings of Michael Brown, Eric Garner, Philando Castile, Tamir Rice, Walter Scott, Sandra Bland, Freddie Gray and George Floyd all sparked nationwide protests and calls for racial justice[17] and police reform[18]. Policing was driven into crisis mode as community outrage threatened to delegitimize the existing police power structure.

In response to the twin threats of cost pressures and community criticism, police departments further embraced startup technology companies[19] selling big data efficiencies and the hope that something “data-driven” would allow communities to move beyond the all-too-human problems of policing. Predictive analytics and bodycam video capabilities were sold as objective solutions[20] to racial bias. In large measure, the public relations strategy worked, allowing law enforcement to embrace predictive policing[21] and expand digital surveillance[22].

A law enforcement drone flew over demonstrators, Friday, June 5, 2020, in Atlanta.

AP Photo/Mike Stewart[23]

Today, in the midst of renewed outrage against structural racism and police brutality, and in the shadow of an even deeper economic recession, law enforcement organizations face the same temptation to adopt a technology-based solution to deep societal problems. Police chiefs are likely to want to turn the page from the current levels of community anger and distrust.

The dangers of high-tech surveillance

Instead of repeating the mistakes of the past 12 years or so, communities have an opportunity to reject the expansion of big data policing. The dangers have only increased, the harms made plain by experience.

Those small startup companies that initially rushed into the policing business have been replaced by big technology companies[24] with deep pockets and big ambitions.

Axon[25] capitalized on the demands for police accountability after the protests in Ferguson and Baltimore to become a multimillion-dollar company providing digital services for police-worn body cameras. Amazon has been expanding partnerships[26] with hundreds of police departments through its Ring cameras[27] and Neighbors App[28]. Other companies, like BriefCam, Palantir and ShotSpotter, offer a host of video analytics[29], social network analysis[30] and other sensor technologies[31], and they can afford to sell technology cheaply in the short run in hopes of gaining a long-term market advantage.

The technology itself is more powerful. The algorithmic models created a decade ago pale in comparison to machine learning capabilities today. Video camera streams have been digitized and augmented with analytics[32] and facial recognition[33] capabilities, turning static surveillance into a virtual time machine[34] to find patterns in crowds. Adding to the data trap are smartphones[35], smart homes[36] and smart cars[37], which now allow police to uncover individuals’ digital trails with relative ease.

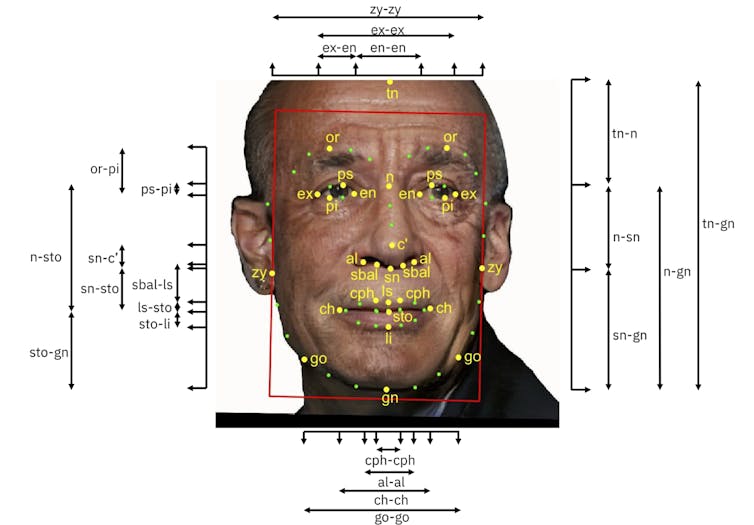

Researchers have been working to overcome widespread racial bias in facial recognition.

IBM Research/Flickr, CC BY-ND[38][39]

The technology is more interconnected. One of the natural limiting factors of first generation big data policing technology was the fact that it remained siloed. Databases could not communicate with one another. Data could not be easily shared. That limiting factor has shrunk as more aggregated data systems have been developed within government[40] and by private vendors[41].

The promise of objective, unbiased technology didn’t pan out. Race bias in policing[42] was not fixed by turning on a camera. Instead, the technology created new problems and highlighted the lack of accountability[43] for high-profile instances of police violence.

Lessons for reining in police spying

The harms of big data policing have been repeatedly exposed. Programs that attempted to predict individuals’ behaviors in Chicago[44] and Los Angeles[45] have been shut down after devastating audits[46] cataloged their discriminatory impact and practical failure. Place-based predictive systems[47] have been shut down[48] in Los Angeles and other cities that initially had adopted the technology. Scandals involving facial recognition[49], social network analysis technology[50] and large-scale sensor surveillance[51] serve as a warning that technology cannot address the deeper issues of race, power and privacy that lie at the heart of modern-day policing.

The lesson of the first era[52] of big data policing is that issues of race, transparency and constitutional rights must be at the forefront of design, regulation and use. Every mistake[53] can be traced to a failure to see how the surveillance technology fits within the context of modern police power – a context that includes longstanding issues of racism and social control. Every solution points to addressing that power imbalance at the front end[54], through local oversight, community engagement[55] and federal law[56], not after the technology has been adopted.

The debates about defunding[57], demilitarizing[58] and reimagining[59] existing law enforcement practices must include a discussion about police surveillance. There is a decade of missteps to learn from and there are era-defining privacy and racial justice challenges ahead. How police departments respond to the siren call of big data surveillance[60] will reveal whether they’re on course to repeat the same mistakes.

References

- ^ Video of police (time.com)

- ^ police surveillance of protesters (www.marketplace.org)

- ^ facial recognition (www.google.com)

- ^ military-grade drones (www.vox.com)

- ^ has grown (theintercept.com)

- ^ including my own (nyupress.org)

- ^ bias (www.axios.com)

- ^ overreach and abuse (www.theatlantic.com)

- ^ threat to civil liberties (www.pbs.org)

- ^ IBM (www.technologyreview.com)

- ^ Amazon (www.wired.com)

- ^ Microsoft (www.nbcnews.com)

- ^ regulating police use of facial recognition (www.protocol.com)

- ^ big data policing (thecrimereport.org)

- ^ budget cuts (www.ncdsv.org)

- ^ new forms of data-driven policing (www.siliconvalley.com)

- ^ racial justice (www.cbsnews.com)

- ^ police reform (www.theatlantic.com)

- ^ startup technology companies (www.smithsonianmag.com)

- ^ objective solutions (www.governing.com)

- ^ predictive policing (time.com)

- ^ digital surveillance (theintercept.com)

- ^ AP Photo/Mike Stewart (www.apimages.com)

- ^ big technology companies (www.nytimes.com)

- ^ Axon (theappeal.org)

- ^ Amazon has been expanding partnerships (www.nbcnews.com)

- ^ Ring cameras (www.washingtonpost.com)

- ^ Neighbors App (www.vice.com)

- ^ video analytics (www.vice.com)

- ^ social network analysis (www.wired.com)

- ^ sensor technologies (www.govtech.com)

- ^ analytics (slate.com)

- ^ facial recognition (www.nbcnews.com)

- ^ virtual time machine (scholarship.law.upenn.edu)

- ^ smartphones (www.nytimes.com)

- ^ smart homes (papers.ssrn.com)

- ^ smart cars (www.washingtonpost.com)

- ^ IBM Research/Flickr (www.flickr.com)

- ^ CC BY-ND (creativecommons.org)

- ^ within government (www.fbi.gov)

- ^ by private vendors (www.thetrace.org)

- ^ Race bias in policing (www.propublica.org)

- ^ lack of accountability (www.vox.com)

- ^ Chicago (www.chicagotribune.com)

- ^ Los Angeles (www.courthousenews.com)

- ^ audits (www.cnn.com)

- ^ Place-based predictive systems (www.theatlantic.com)

- ^ shut down (www.buzzfeednews.com)

- ^ facial recognition (www.nytimes.com)

- ^ social network analysis technology (www.nola.com)

- ^ sensor surveillance (www.citylab.com)

- ^ lesson of the first era (www.economist.com)

- ^ mistake (papers.ssrn.com)

- ^ the front end (www.thealiadviser.org)

- ^ community engagement (www.measureaustin.org)

- ^ federal law (www.vice.com)

- ^ defunding (www.thenation.com)

- ^ demilitarizing (www.nytimes.com)

- ^ reimagining (www.theatlantic.com)

- ^ big data surveillance (www.asanet.org)

- ^ Sign up for The Conversation’s science newsletter (theconversation.com)

Read more https://theconversation.com/high-tech-surveillance-amplifies-police-bias-and-overreach-140225