Misinformation and biases infect social media, both intentionally and accidentally

- Written by Giovanni Luca Ciampaglia, Assistant Research Scientist, Indiana University Network Science Institute, Indiana University

Social media are among the primary sources of news in the U.S.[1] and across the world. Yet users are exposed to content of questionable accuracy, including conspiracy theories[2], clickbait[3], hyperpartisan content[4], pseudo science[5] and even fabricated “fake news” reports[6].

It’s not surprising that there’s so much disinformation published: Spam and online fraud are lucrative for criminals[7], and government and political propaganda yield both partisan and financial benefits[8]. But the fact that low-credibility content spreads so quickly and easily[9] suggests that people and the algorithms behind social media platforms are vulnerable to manipulation.

Our research has identified three types of bias that make the social media ecosystem vulnerable to both intentional and accidental misinformation. That is why our Observatory on Social Media[10] at Indiana University is building tools[11] to help people become aware of these biases and protect themselves from outside influences designed to exploit them.

Bias in the brain

Cognitive biases originate in the way the brain processes the information that every person encounters every day. The brain can deal with only a finite amount of information, and too many incoming stimuli can cause information overload[12]. That in itself has serious implications for the quality of information on social media. We have found that steep competition for users’ limited attention means that some ideas go viral despite their low quality[13] – even when people prefer to share high-quality content[14].

To avoid getting overwhelmed, the brain uses a number of tricks[15]. These methods are usually effective, but may also become biases[16] when applied in the wrong contexts.

One cognitive shortcut happens when a person is deciding whether to share a story that appears on their social media feed. People are very affected by the emotional connotations of a headline[17], even though that’s not a good indicator of an article’s accuracy. Much more important is who wrote the piece[18].

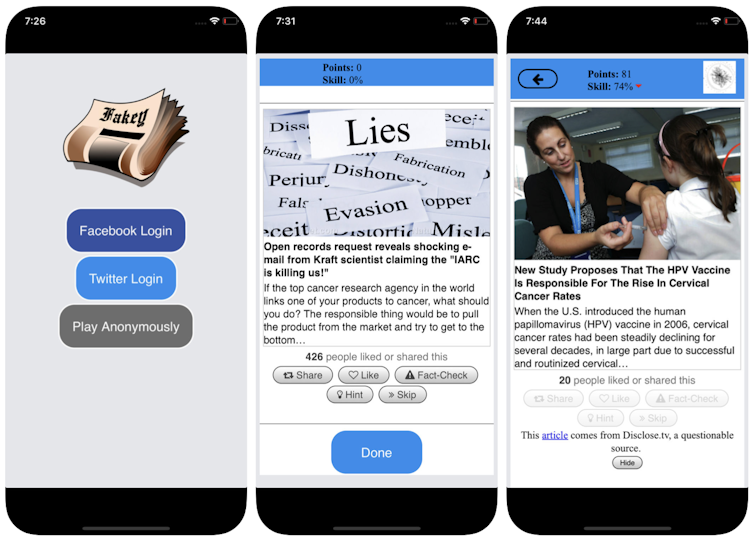

To counter this bias, and help people pay more attention to the source of a claim before sharing it, we developed Fakey[19], a mobile news literacy game (free on Android[20] and iOS[21]) simulating a typical social media news feed, with a mix of news articles from mainstream and low-credibility sources. Players get more points for sharing news from reliable sources and flagging suspicious content for fact-checking. In the process, they learn to recognize signals of source credibility, such as hyperpartisan claims and emotionally charged headlines.

Screenshots of the Fakey game.

Mihai Avram and Filippo Menczer

Bias in society

Another source of bias comes from society. When people connect directly with their peers, the social biases that guide their selection of friends come to influence the information they see.

In fact, our research has found that it is possible to determine the political leanings of a Twitter user[22] by simply looking at the partisan preferences of their friends. Our analysis of the structure of these partisan communication networks[23] found that social networks are particularly efficient at disseminating information – accurate or not – when they are closely tied together and disconnected from other parts of society[24].
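The idea of reading a user's leaning off their friends can be illustrated with a small majority-vote sketch. This is only a toy under stated assumptions, not the method used in the cited research: the account names, the `friends` and `known_leaning` dictionaries and the `infer_leaning` function are all hypothetical, and a real study would work with far larger networks and a trained model.

```python
from collections import Counter

def infer_leaning(user, friends, known_leaning):
    """Guess a user's leaning as the most common known leaning among their friends.

    Returns None when no friend has a known leaning or when the vote is tied.
    """
    votes = Counter(
        known_leaning[f] for f in friends.get(user, []) if f in known_leaning
    )
    if not votes:
        return None
    ranked = votes.most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None  # tie: not enough evidence either way
    return ranked[0][0]

# Hypothetical toy data: who each account follows, plus a few labeled accounts.
friends = {
    "alice": ["news_left", "blog_left", "news_right"],
    "bob": ["news_right", "radio_right"],
    "carol": ["news_left", "news_right"],
}
known_leaning = {
    "news_left": "left",
    "blog_left": "left",
    "news_right": "right",
    "radio_right": "right",
}

predictions = {user: infer_leaning(user, friends, known_leaning) for user in friends}
print(predictions)
```

In this toy data, the account with evenly split friends gets no prediction, while accounts whose friends cluster on one side are easy to label. That mirrors the point about tightly knit partisan communities.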

People’s tendency to evaluate information more favorably if it comes from within their own social circles creates “echo chambers[25]” that are ripe for manipulation, either consciously or unintentionally. This helps explain why so many online conversations devolve into “us versus them” confrontations[26].

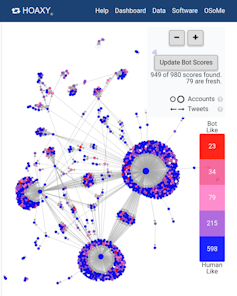

To study how the structure of online social networks makes users vulnerable to disinformation, we built Hoaxy[27], a system that tracks and visualizes the spread of content from low-credibility sources, and how it competes with fact-checking content. Our analysis of the data collected by Hoaxy during the 2016 U.S. presidential election shows that Twitter accounts that shared misinformation were almost completely cut off[28] from the corrections made by the fact-checkers.

When we drilled down on the misinformation-spreading accounts, we found a very dense core group of accounts retweeting each other almost exclusively – including several bots. The only times that users in the misinformed group quoted or mentioned fact-checking organizations were to question their legitimacy or to claim the opposite of what they wrote.

A screenshot of a Hoaxy search shows how common bots – in red and dark pink – are spreading a false story on Twitter.

Hoaxy

Bias in the machine

The third group of biases arises directly from the algorithms used to determine what people see online. Both social media platforms and search engines employ them. These personalization technologies are designed to select only the most engaging and relevant content for each individual user. But in doing so, they may end up reinforcing the cognitive and social biases of users, thus making them even more vulnerable to manipulation.

For instance, the detailed advertising tools built into many social media platforms[29] let disinformation campaigners exploit confirmation bias[30] by tailoring messages[31] to people who are already inclined to believe them.

Also, if a user often clicks on Facebook links from a particular news source, Facebook will tend to show that person more of that site’s content[32]. This so-called “filter bubble[33]” effect may isolate people from diverse perspectives, strengthening confirmation bias.

Our own research shows that social media platforms expose users to a less diverse set of sources than do non-social media sites like Wikipedia. Because this is at the level of a whole platform, not of a single user, we call this the homogeneity bias[34].
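One common way to put a number on how diverse a set of sources is uses Shannon entropy over the share of visits each source receives. The sketch below assumes that measure purely for illustration; the text above does not say which metric the research used, and the visit lists and the `source_entropy` function are hypothetical.

```python
import math
from collections import Counter

def source_entropy(visits):
    """Shannon entropy, in bits, of the distribution of sources in a list of visits."""
    counts = Counter(visits)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# Hypothetical click logs: traffic arriving from a social platform concentrates
# on a couple of sites, while traffic from a reference site spreads more evenly.
social_visits = ["siteA"] * 6 + ["siteB"] * 2
reference_visits = ["siteA", "siteB", "siteC", "siteD"] * 2

social_h = source_entropy(social_visits)
reference_h = source_entropy(reference_visits)
print(social_h, reference_h)
```

A lower entropy means attention is concentrated on fewer sources. In this made-up example the social traffic scores lower than the reference traffic, which is the pattern the homogeneity bias describes.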

Another important ingredient of social media is information that is trending on the platform, according to what is getting the most clicks. We call this popularity bias[35], because we have found that an algorithm designed to promote popular content may negatively affect the overall quality of information on the platform. This also feeds into existing cognitive bias, reinforcing what appears to be popular irrespective of its quality.
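A toy comparison shows how ranking by clicks alone can lower the quality of what gets surfaced. Everything in this sketch is an assumption made for illustration: the item names, click counts and quality scores are invented, and the data is deliberately set up so that the most-clicked items are not the best ones.

```python
from statistics import mean

# Hypothetical items: (name, clicks so far, quality score between 0 and 1).
items = [
    ("viral_rumor", 900, 0.2),
    ("celebrity_quiz", 700, 0.3),
    ("local_report", 150, 0.8),
    ("deep_investigation", 90, 0.9),
    ("fact_check", 60, 0.95),
]

def top_k(items, k, key_index):
    """Return the k items with the largest value at the given tuple position."""
    return sorted(items, key=lambda item: item[key_index], reverse=True)[:k]

def mean_quality(selection):
    """Average quality score of the selected items."""
    return mean(item[2] for item in selection)

by_popularity = top_k(items, 2, key_index=1)  # what a click-driven feed would show
by_quality = top_k(items, 2, key_index=2)     # what a quality-driven feed would show

print([name for name, _, _ in by_popularity], mean_quality(by_popularity))
print([name for name, _, _ in by_quality], mean_quality(by_quality))
```

If the promoted items then attract even more clicks because they are shown first, the gap can widen over time, which is one way to read the reinforcement described above.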

All these algorithmic biases can be manipulated by social bots[36], computer programs that interact with humans through social media accounts. Most social bots, like Twitter’s Big Ben[37], are harmless. However, some conceal their real nature and are used for malicious purposes, such as boosting disinformation[38] or falsely creating the appearance of a grassroots movement[39], also called “astroturfing.” We found evidence of this type of manipulation[40] in the run-up to the 2010 U.S. midterm election.

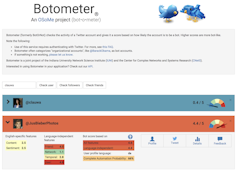

A screenshot of the Botometer website, showing one human and one bot account.

Botometer

To study these manipulation strategies, we developed a tool to detect social bots called Botometer[41]. Botometer uses machine learning to detect bot accounts by inspecting thousands of different features of a Twitter account, like the times of its posts, how often it tweets, and the accounts it follows and retweets. It is not perfect, but it has revealed that as many as 15 percent of Twitter accounts show signs of being bots[42].
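To give a rough sense of what a “feature” based on posting times might look like, the sketch below turns a list of timestamps into a few numbers that a classifier could learn from. It is not Botometer’s code or API: the `timing_features` function, the feature names and the two example accounts are hypothetical, and a real system would combine many more features with a model trained on labeled accounts.

```python
from datetime import datetime
from statistics import mean, pstdev

def timing_features(timestamps):
    """Compute simple posting-rhythm features from a non-empty list of datetimes."""
    ordered = sorted(timestamps)
    # Seconds elapsed between each pair of consecutive posts.
    gaps = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    # Treat a zero-length observation window as one day to avoid dividing by zero.
    span_days = (ordered[-1] - ordered[0]).total_seconds() / 86400 or 1.0
    return {
        "posts_per_day": len(ordered) / span_days,
        "mean_gap_seconds": mean(gaps) if gaps else 0.0,
        "gap_stdev_seconds": pstdev(gaps) if gaps else 0.0,
    }

# Hypothetical accounts: one posts exactly on the hour, one posts irregularly.
clockwork = timing_features([datetime(2018, 1, 1, hour) for hour in range(5)])
irregular = timing_features([
    datetime(2018, 1, 1, 8, 0),
    datetime(2018, 1, 1, 8, 5),
    datetime(2018, 1, 1, 12, 30),
    datetime(2018, 1, 1, 21, 0),
])

print(clockwork)
print(irregular)
```

Perfectly regular gaps are not proof of automation, since harmless bots and scheduled posts produce them too. Signals like this are only useful in combination with many others.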

Using Botometer in conjunction with Hoaxy, we analyzed the core of the misinformation network during the 2016 U.S. presidential campaign. We found many bots exploiting the cognitive, confirmation and popularity biases of their victims, as well as Twitter’s algorithmic biases.

These bots are able to construct filter bubbles around vulnerable users, feeding them false claims and misinformation. First, they can attract the attention of human users who support a particular candidate by tweeting that candidate’s hashtags or by mentioning and retweeting the person. Then the bots can amplify false claims smearing opponents by retweeting articles from low-credibility sources that match certain keywords. This activity also makes the algorithm highlight for other users false stories that are being shared widely.

Even as our research, and others’, shows how individuals, institutions and even entire societies can be manipulated on social media, there are many questions[43] left to answer. It’s especially important to discover how these different biases interact with each other, potentially creating more complex vulnerabilities.

Tools like ours offer internet users more information about disinformation, and therefore some degree of protection from its harms. The solutions will not likely be only technological[44], though there will probably be some technical aspects to them. But they must take into account the cognitive and social aspects[45] of the problem.

References

- [1] primary sources of news in the U.S. (www.journalism.org)

- [2] conspiracy theories (conspiracypsychology.com)

- [3] clickbait (www.polygon.com)

- [4] hyperpartisan content (www.pbs.org)

- [5] pseudo science (www.newyorker.com)

- [6] fabricated “fake news” reports (www.smithsonianmag.com)

- [7] are lucrative for criminals (www.symantec.com)

- [8] both partisan and financial benefits (www.ned.org)

- [9] low-credibility content spreads so quickly and easily (doi.org)

- [10] Observatory on Social Media (osome.iuni.iu.edu)

- [11] tools (osome.iuni.iu.edu)

- [12] information overload (hbr.org)

- [13] some ideas go viral despite their low quality (doi.org)

- [14] even when people prefer to share high-quality content (doi.org)

- [15] number of tricks (global.oup.com)

- [16] become biases (www.psychologytoday.com)

- [17] very affected by the emotional connotations of a headline (doi.org)

- [18] who wrote the piece (digitalliteracy.cornell.edu)

- [19] Fakey (fakey.iuni.iu.edu)

- [20] Android (play.google.com)

- [21] iOS (itunes.apple.com)

- [22] determine the political leanings of a Twitter user (doi.org)

- [23] partisan communication networks (www.aaai.org)

- [24] they are closely tied together and disconnected from other parts of society (doi.org)

- [25] echo chambers (arstechnica.com)

- [26] “us versus them” confrontations (www.pewinternet.org)

- [27] Hoaxy (hoaxy.iuni.iu.edu)

- [28] almost completely cut off (journals.plos.org)

- [29] advertising tools built into many social media platforms (theconversation.com)

- [30] confirmation bias (www.psychologytoday.com)

- [31] tailoring messages (www.washingtonpost.com)

- [32] tend to show that person more of that site’s content (www.wired.com)

- [33] filter bubble (www.brainpickings.org)

- [34] homogeneity bias (doi.org)

- [35] popularity bias (arxiv.org)

- [36] social bots (cacm.acm.org)

- [37] Big Ben (twitter.com)

- [38] boosting disinformation (newsroom.fb.com)

- [39] creating the appearance of a grassroots movement (www.businessinsider.com)

- [40] evidence of this type of manipulation (www.aaai.org)

- [41] Botometer (botometer.org)

- [42] 15 percent of Twitter accounts show signs of being bots (aaai.org)

- [43] many questions (doi.org)

- [44] not likely be only technological (www.hewlett.org)

- [45] the cognitive and social aspects (doi.org)
