Apple's plan to scan your phone raises the stakes on a key question: Can you trust Big Tech?

- Written by Laurin Weissinger, Lecturer in Cybersecurity, Tufts University

Apple’s plan to scan customers’ phones and other devices[1] for images depicting child sexual abuse generated a backlash[2] over privacy concerns, which led the company to announce a delay[3].

Apple, Facebook, Google and other companies have long scanned customers’ images that are stored on the companies’ servers for this material. Scanning data on users’ devices is a significant change[4].

However well-intentioned, and whether or not Apple is willing and able to follow through on its promises to protect customers’ privacy, the company’s plan highlights the fact that people who buy iPhones are not masters of their own devices. In addition, Apple is using a complicated scanning system that is hard to audit[5]. Thus, customers face a stark reality: If you use an iPhone, you have to trust Apple.

Specifically, customers are forced to trust Apple to only use this system as described, run the system securely over time, and put the interests of its users over the interests of other parties, including the most powerful governments on the planet.

Despite Apple’s so-far-unique plan, the problem of trust isn’t specific to Apple. Other large tech companies also have considerable control over customers’ devices and insight into their data.

What is trust?

Trust is “the willingness of a party to be vulnerable to the actions of another party[6],” according to social scientists. People base the decision to trust on experience, signs and signals. But past behavior, promises, the way someone acts, evidence and even contracts only give you data points. They cannot guarantee future action.

Therefore, trust is a matter of probabilities. You are, in a sense, rolling the dice whenever you trust someone or an organization.

Trustworthiness is a hidden property. People collect information about someone’s likely future behavior, but cannot know for sure whether the person has the ability to stick to their word, is truly benevolent and has the integrity (principles, processes and consistency) to maintain their behavior over time, under pressure or when the unexpected occurs.

Trust in Apple and Big Tech

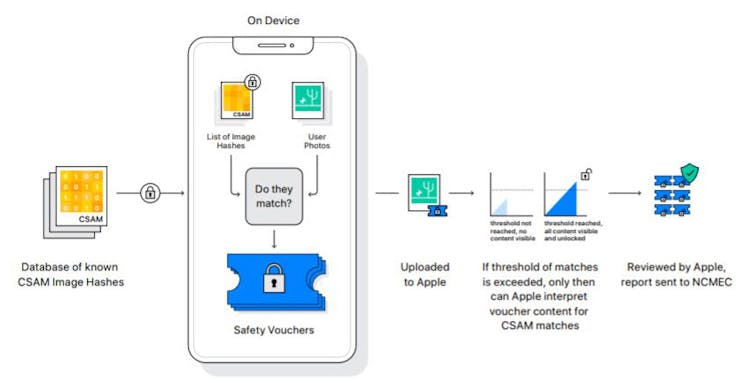

Apple has stated that its scanning system will only ever be used for detecting child sexual abuse material[7] and that it has multiple strong privacy protections. The technical details of the system[8] indicate that Apple has taken steps to protect user privacy unless the targeted material is detected by the system. For example, humans will review someone’s suspect material only when the number of times the system detects the targeted material reaches a certain threshold. However, Apple has given little proof regarding how this system will work in practice.

Apple’s new system for comparing your photos with a database of known images of child abuse works on your device rather than on a server.

courtesy Apple[9]
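
The threshold rule described above can be illustrated with a toy sketch. This is not Apple's implementation: it does not model NeuralHash or any of the cryptographic protections Apple describes, and the names `Account`, `record_photo` and `REVIEW_THRESHOLD`, the threshold value of 3 and the placeholder hash strings are all assumptions made for illustration. It only shows the general idea that individual matches are counted and nothing is escalated to human review until the count reaches a threshold.

```python
from dataclasses import dataclass

# Hypothetical value: the article only says "a certain threshold".
REVIEW_THRESHOLD = 3


@dataclass
class Account:
    """Toy per-account state: number of matches and whether review is triggered."""
    match_count: int = 0
    flagged_for_review: bool = False


def record_photo(account, photo_hash, known_hashes, threshold=REVIEW_THRESHOLD):
    """Count a match if the hash is in the known set.

    The account is flagged for human review only once the number of
    matches reaches the threshold. Returns the current flag state.
    """
    if photo_hash in known_hashes:
        account.match_count += 1
    if account.match_count >= threshold:
        account.flagged_for_review = True
    return account.flagged_for_review


# Placeholder strings standing in for entries in a database of known hashes.
known_hashes = {"hash-a", "hash-b", "hash-c"}

account = Account()
uploads = ["hash-x", "hash-a", "hash-y", "hash-b", "hash-c"]
results = [record_photo(account, h, known_hashes) for h in uploads]
print(results)  # [False, False, False, False, True]
```

In this sketch, the first two matches leave the account unflagged. Only the third match crosses the assumed threshold. Whether a real system behaves this way in practice is exactly the kind of claim that users cannot verify for themselves.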

After analyzing the “NeuralHash” algorithm[10] that Apple is basing its scanning system on, security researchers and civil rights organizations warn that the system is likely vulnerable[11] to hackers, in contrast to Apple’s claims[12].

Critics also fear that the system will be used to scan for other material[13], such as indications of political dissent. Apple, along with other Big Tech players, has caved to the demands of authoritarian regimes, notably China, to allow government surveillance of technology users. In practice, the Chinese government has access to all user data[14]. What will be different this time?

It should also be noted that Apple is not operating this system on its own. In the U.S., Apple plans to use data from, and report suspect material to, the nonprofit National Center for Missing and Exploited Children[15]. Thus, trusting Apple is not enough. Users must also trust the company’s partners to act benevolently and with integrity.

Big Tech’s less-than-encouraging track record

This case exists within a context of regular Big Tech privacy invasions[16] and moves to further curtail consumer freedoms and control[17]. The companies have positioned themselves as responsible parties, but many privacy experts say there is too little transparency and scant technical or historical evidence for these claims.

Another concern is unintended consequences. Apple might really want to protect children and protect users’ privacy at the same time. Nevertheless, the company has now announced – and staked its trustworthiness to – a technology that is well-suited to spying on large numbers of people. Governments might pass laws to extend scanning to other material deemed illegal.

Would Apple, and potentially other tech firms, refuse to follow these laws and pull out of these markets, or would they comply with potentially draconian local laws[18]? The future is uncertain, but Apple and other tech firms have chosen to acquiesce to oppressive regimes before. Tech companies that choose to operate in China are forced to submit to censorship[19], for example.

Weighing whether to trust Apple or other tech companies

There’s no single answer to the question of whether Apple, Google or their competitors can be trusted. Risks are different depending on who you are and where you are in the world. An activist in India faces different threats and risks than an Italian defense lawyer. Trust is a matter of probabilities, and risks are not only probabilistic but also situational.

It’s a matter of what probability of failure or deception you can live with, what the relevant threats and risks are, and what protections or mitigations exist. Your government’s position[20], the existence of strong local privacy laws, the strength of rule of law and your own technical ability are relevant factors. Yet, there is one thing you can count on: Tech firms typically have extensive control over your devices and data.

Like all large organizations, tech firms are complex: Employees and management come and go, and regulations, policies and power dynamics change.

A company might be trustworthy today but not tomorrow.

Big Tech companies have shown behaviors in the past that should make users question their trustworthiness, in particular when it comes to privacy violations. But they have also defended user privacy in other cases, for example in the San Bernardino mass shooting case and subsequent debates about encryption[22].

Last but not least, Big Tech does not exist in a vacuum and is not all-powerful. Apple, Google, Microsoft, Amazon, Facebook and others have to respond to various external pressures and powers. Perhaps, considering these circumstances, greater transparency, more independent audits by journalists and trusted people in civil society, more user control, more open-source code and genuine discourse with customers might be a good start to balance different objectives.

While only a first step, these measures would at least let consumers make more informed choices about what products to use or buy.

References

- ^ scan customers’ phones and other devices (www.apple.com)

- ^ backlash (arstechnica.com)

- ^ announce a delay (techcrunch.com)

- ^ significant change (www.lawfareblog.com)

- ^ that is hard to audit (www.eff.org)

- ^ vulnerable to the actions of another party (doi.org)

- ^ only ever be used for detecting child sexual abuse material (www.cnbc.com)

- ^ technical details of the system (www.apple.com)

- ^ courtesy Apple (www.apple.com)

- ^ “NeuralHash” algorithm (pocketnow.com)

- ^ vulnerable (www.schneier.com)

- ^ Apple’s claims (www.theverge.com)

- ^ used to scan for other material (www.theguardian.com)

- ^ access to all user data (www.nytimes.com)

- ^ National Center for Missing and Exploited Children (www.missingkids.org)

- ^ regular Big Tech privacy invasions (theconversation.com)

- ^ further curtail consumer freedoms and control (www.forbes.com)

- ^ or would they comply with potentially draconian local laws (www.forbes.com)

- ^ forced to submit to censorship (www.businessinsider.com)

- ^ Your government’s position (9to5mac.com)

- ^ Sign up today (theconversation.com)

- ^ San Bernardino mass shooting case and subsequent debates about encryption (www.npr.org)
