Self-driving cars and humans face inevitable collisions

- Written by Peter Hancock, Professor of Psychology, Civil and Environmental Engineering, and Industrial Engineering and Management Systems, University of Central Florida

In 1938, when there were only about one-tenth as many cars[1] on U.S. roadways as there are today[2], a brilliant psychologist and a pragmatic engineer joined forces to write one of the most influential works[3] ever published on driving. A self-driving car’s killing of a pedestrian[4] in Arizona highlights how their work is still relevant today – especially regarding the safety of automated and autonomous vehicles[5].

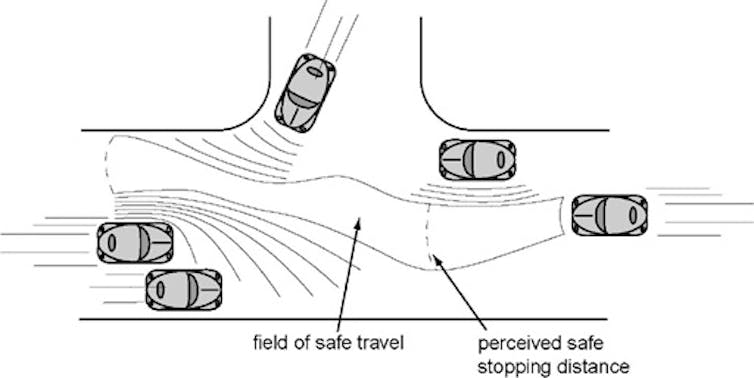

James Gibson, the psychologist in question, and the engineer Laurence Crooks, his partner, evaluated a driver’s control of a vehicle in two ways. The first was to measure what they called the “minimum stopping zone,” the distance it would take to stop after the driver slammed on the brakes. The second was to look at the driver’s psychological perception of the possible hazards around the vehicle, which they called the “field of safe travel[6].” If someone drove so that all the potential hazards were outside the range needed to stop the car, that person was driving safely. Unsafe driving, on the other hand, involved going so quickly or steering so erratically that the car couldn’t stop before potentially hitting those identified hazards.

The driver of the car on the right judges a safe path through obstacles based on their movements and perceived stopping distances.

Adapted from Gibson and Crooks by Steven Lehar[7]
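To make the Gibson and Crooks criterion concrete, here is a minimal sketch of the "minimum stopping zone" test described above. It is not their method: they framed the zone in perceptual terms, whereas this sketch assumes the textbook approximation of reaction distance plus braking distance. The `Hazard` class, the function names, and the default reaction time (1.5 s) and deceleration (7 m/s²) are illustrative assumptions, not values from the original work.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    """A hypothetical hazard on the car's path (illustrative only)."""
    name: str
    distance_m: float  # distance ahead of the car, in meters


def minimum_stopping_zone(speed_mps: float,
                          reaction_time_s: float = 1.5,
                          deceleration_mps2: float = 7.0) -> float:
    """Approximate stopping distance in meters.

    Assumes constant speed during the reaction time, then constant
    deceleration until the car stops. Default values are assumptions.
    """
    if speed_mps < 0 or reaction_time_s < 0 or deceleration_mps2 <= 0:
        raise ValueError("speed and reaction time must be >= 0, deceleration > 0")
    reaction_distance = speed_mps * reaction_time_s
    braking_distance = speed_mps ** 2 / (2 * deceleration_mps2)
    return reaction_distance + braking_distance


def hazards_inside_stopping_zone(speed_mps: float, hazards: list[Hazard],
                                 **driver: float) -> list[Hazard]:
    """Return the hazards the car could not stop short of."""
    zone = minimum_stopping_zone(speed_mps, **driver)
    return [h for h in hazards if h.distance_m <= zone]


def is_driving_safely(speed_mps: float, hazards: list[Hazard],
                      **driver: float) -> bool:
    """Safe, in this simplified sense, when every hazard lies beyond the zone."""
    return not hazards_inside_stopping_zone(speed_mps, hazards, **driver)


if __name__ == "__main__":
    hazards = [Hazard("pedestrian", 40.0), Hazard("parked car", 80.0)]
    for speed in (10.0, 20.0):
        zone = minimum_stopping_zone(speed)
        print(f"{speed:.0f} m/s: zone {zone:.1f} m, "
              f"safe={is_driving_safely(speed, hazards)}")
```

Passing a different `reaction_time_s` is one rough way to see how a driver's response time, human or machine, changes the size of the zone, and therefore which hazards count as threats.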

However, this field of safe travel isn’t the same for driverless cars. They perceive the world around them using lasers, radar, GPS and other sensors, in addition to their on-board cameras. So their perceptions can be very different from those presented to human eyes. At the same time, their active response times can be far faster – or sometimes even excessively slow, in cases where they require human intervention[8].

I have written extensively on the nature of human interaction with technology[9], especially concerning the coming wave of automated automobiles[10]. It’s clear to me that, if people and machines drive only according to their respective – and significantly different – perceptual and response abilities, then conflicts and collisions will be almost inevitable. To share the road safely, each side will need to understand the other much more intimately than they do now.

Interplay of movement and view

For human drivers, vision is king. But what drivers see depends on how they move the car: Braking, accelerating and steering change the car’s position and thereby the driver’s view. Gibson understood[11] that this mutual interdependence of perception and action[12] meant that when faced with a particular situation on the road, people expect others to behave in specific ways. For instance, a person watching a car arrive at a stop sign would expect the driver to stop the car; look around for oncoming traffic, pedestrians, bicyclists and other obstacles; and proceed only when the coast is clear.

A stop sign is clearly designed for human drivers. It gives them a chance to look around carefully without being distracted by other aspects of driving, like steering. But an autonomous vehicle can scan its entire surroundings in a fraction of a second. It need not necessarily stop – or even slow down – to navigate the intersection safely. But an autonomous car that rolls through a stop sign without even pausing will seem alarming, and even dangerous, to nearby humans, because they’re assuming human rules still apply.

What machines can understand

Here’s another example: Think about cars merging from a side street onto a busy thoroughfare. People know that making eye contact[13] with another driver can be an effective method of communicating with each other. In a split second, one driver can ask permission to cut in and the other driver can acknowledge that yes, she will yield to make room. How exactly should people have this interaction with a self-driving car? It’s something that has yet to be established.

Pedestrians, bicyclists, motorcycle riders, car drivers and truck drivers are all able to understand what other human drivers are likely to do – and to express their own intentions to another person appropriately.

An automated vehicle is another matter altogether. It will know little or nothing of the “can I?” “yes, OK” types of informal interaction people engage in every day, and will be stuck only with the specific rules it has been provided. Since few algorithms can understand these implicit human assumptions, they’ll behave differently from how people expect. Some of these differences might seem subtle – but some transgressions, such as running the stop sign, might cause injury or even death.

What’s more, driverless cars can be effectively blinded if their various sensory systems become blocked[14], malfunction or provide contradictory information. In the 2016 fatal crash of a Tesla in “Autopilot” mode, for example, part of the problem might have been a conflict between some sensors that could have detected a tractor-trailer across the road and others that likely didn’t because it was backlit or too high off the ground[15]. These failures may be rather different from the shortcomings people have come to expect from fellow humans.

A Tesla ‘Autopilot’ system steers directly for a barricade.

As with all new technologies, there will be accidents and problems – and on the roads, that will almost inevitably result in injury and death. But this type of problem isn’t unique to self-driving cars. Rather, it’s perhaps inherent in any situation[16] when humans and automated systems share space.

References

- [1] one-tenth the number of cars (www.fhwa.dot.gov)

- [2] as there are today (www.fhwa.dot.gov)

- [3] most influential works (www.worldcat.org)

- [4] killing of a pedestrian (www.cnet.com)

- [5] safety of automated and autonomous vehicles (theconversation.com)

- [6] field of safe travel (doi.org)

- [7] Adapted from Gibson and Crooks by Steven Lehar (cns-alumni.bu.edu)

- [8] require human intervention (dx.doi.org)

- [9] human interaction with technology (doi.org)

- [10] coming wave of automated automobiles (theconversation.com)

- [11] Gibson understood (www.worldcat.org)

- [12] mutual interdependence of perception and action (www.learning-theories.com)

- [13] making eye contact (www.wsj.com)

- [14] various sensory systems become blocked (www.flyingmag.com)

- [15] backlit or too high off the ground (www.theverge.com)

- [16] inherent in any situation (doi.org)

Read more http://theconversation.com/self-driving-cars-and-humans-face-inevitable-collisions-93774