The battle against disinformation is global

- Written by Scott Shackelford, Associate Professor of Business Law and Ethics; Director, Ostrom Workshop Program on Cybersecurity and Internet Governance; Cybersecurity Program Chair, IU-Bloomington, Indiana University

Disinformation-spewing online bots and trolls from halfway around the world are continuing to shape local and national debates[1] by spreading lies online on a massive scale. In 2019, Russia used Facebook to intervene[2] in the internal politics of eight African nations.

Russia has a long history[3] of using disinformation campaigns to undermine opponents – even hoodwinking CBS News anchor Dan Rather[4] back in 1987 into saying that U.S. biological warfare experiments sparked the AIDS epidemic[5].

One group of researchers identified Russian interference in 27 elections[6] around the world, from 1991 to 2017. Russia interfered[7] in the 2016 U.S. elections, reaching more than 126 million Americans[8] on Facebook alone. It is almost certainly[9] already[10] doing so[11] again in 2020.

But Russia is not alone: From the end of World War II to the year 2000, scholars have documented 116 attempts to influence elections[12] – 80 of them by the United States.

Nations around the world, including the United States, have to decide how to react[13]. There is no shortage of experimentation, with new laws and codes of conduct, and even efforts to cut off internet access entirely – and that was before misinformation regarding the COVID-19 pandemic[14].

As a scholar[15] of cybersecurity policy, I have been reviewing the efforts of nations around the world to protect their citizens from foreign interference while protecting free speech; one such review is being published[16] by the Washington and Lee Law Review.

There is no perfect approach, given the different cultural and legal traditions in play. But there’s plenty to learn and use to diminish outsiders’ ability to hack U.S. democracy.

Europe

The European Union has been a target of Russian efforts to undermine stability and trust in democratic institutions, including elections, across Europe.

Disinformation was rampant across Europe in 2019, including in the Netherlands[17] and the U.K., prompting the closing[18] of far-right Facebook groups for spreading “fake news and polarizing content.”

This has been repeated elsewhere in Europe, such as in Spain, where Facebook – again under pressure[19] from the authorities and civil society groups – closed down far-right groups’ Facebook pages days ahead of the country’s parliamentary elections in April 2019.

The disinformation efforts go beyond Facebook to include manipulated Twitter feeds[20], in which Twitter handles are renamed[21] by hackers to mislead followers. A growing element of these campaigns is the use of artificial intelligence to create manipulated videos that look real, which are called deepfakes[22].

Not all of this interference is foreign, though – political parties across Europe and around the world are learning disinformation tactics and deploying them in their own countries to meet their own goals. Both the Labour and Conservative parties in the U.K. engaged[23] in these tactics in late 2019, for example.

In response, the EU is spending more[24] money on combating disinformation across the board by hiring new staff with expertise in data mining and analytics to respond to complaints and proactively detect disinformation. It is working to get member countries to share information more readily, and has built a system that provides nations with real-time alerts of disinformation campaigns[25]. It is unclear whether the U.K. will be participating[26] in these activities post-Brexit.

The EU also seems to be losing patience with Silicon Valley. It pressured social media giants like Facebook, Google and Twitter to sign the Code of Practice on Disinformation[27] in 2018. This initiative is the first time that the tech industry has agreed[28] “to self-regulatory standards to fight disinformation.” Among other provisions, the code requires signatories to cull fake accounts, and to report monthly on their efforts to increase transparency for political ads.

In response, these firms have set up[29] “searchable political-ad databases” and have begun to take down “disruptive, misleading or false” information from their platforms. But the code is not binding, and naming and shaming violators[30] does not guarantee better behavior in the future.

At the national level, France has taken a leading role[31] in taxing tech giants to rein in their power, including how they are used to spread disinformation, prompting threats of retaliatory tariffs from the Trump administration. But this may just be a “warmup[32]” to more ambitious actions designed to help protect both competition and democracy.

An Indian police officer inspects a damaged vehicle from which two men were taken and lynched by a mob afraid of strangers in their area because of misinformation spread on WhatsApp.

Biju Boro/AFP via Getty Images[33]

Asia

Democracies across Asia are also dealing with disinformation.

In Indonesia, for example, President Joko Widodo spearheaded the creation of the new National Cyber and Encryption Agency to combat disinformation[34] in the country’s elections. In one case, in June 2019, a member of the Muslim Cyber Army was arrested in Java[35] for posting misinformation implying that the Indonesian government was being controlled by China.

Malaysia, like Indonesia, has criminalized[36] the sharing of misinformation. Myanmar[37] and Thailand have leaned on law enforcement to curtail misinformation, arresting people who they argue are behind disinformation campaigns; these powers have been abused in some cases to silence critics of public corruption[38].

The problem of disinformation in India is so severe that it has been likened by some commentators to a public health crisis[39]. One Microsoft study[40], for example, found that 64% of Indians encountered disinformation online in 2019, which was the highest proportion among 22 surveyed countries.

These incidents have not only affected elections in India, such as by spreading false information about candidates on WhatsApp, but have also led to real-world harm[41], including at least 33 deaths and 69 instances of mob violence following kidnapping allegations.

In response, the Indian government has shut down[42] the internet more than 100 times over the past year, and has proposed laws that would give it largely unchecked surveillance powers, mirroring Chinese-style internet censorship[43].

Australia and New Zealand

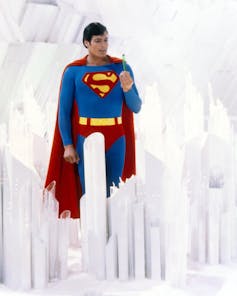

Superman is not coming to defend ‘truth, justice and the American way’ from disinformation.

Silver Screen Collection/Getty Images[44]

Australia and New Zealand have also been targets of online[45] influence campaigns[46] – not from Russia, but from China. In response, Australia has enacted a new law to ban foreign interference in Australia’s elections, but enforcement[47] has been lacking.

New Zealand has taken on a more global leadership role in combating this problem. In partnership with France, New Zealand’s Christchurch Call to Eliminate Terrorist and Violent Extremist Content Online has more than 50 nations[48] supporting its goal of stopping the spread of violent extremism online and banning foreign political donations[49]. Although not necessarily disinformation, such content can similarly widen fissures in democratic societies and disrupt elections.

Making cyberspace safe for democracy

Groups within the U.S. and outside it have long sought to exploit domestic divisions like inequality and injustice. This is a global issue, demanding action from both advanced and emerging democracies.

The U.S., for example, could take a wider view[50] of combating disinformation, featuring three parts.

First, more integration of disparate efforts is vital. That does not mean establishing an independent agency (as in Indonesia), for example, or focusing tenaciously on censorship and surveillance (as in India), but it could mean the current Federal Trade Commission and Justice Department investigations[51] into tech giants including Facebook should include disinformation as one focus.

Second, social media firms – including Facebook – could agree to comply with the EU Code of Practice on Disinformation globally, as some are already doing with the EU’s data privacy[52] regulations.

Third, media literacy and education are imperative to help inoculate citizens against disinformation. Educational reforms[53] are urgently needed to help students recognize disinformation when they see it, a topic all the more important given the rise of deepfakes[54].

In short, by working together and taking these threats seriously, we might be able to find a way for democracy to persist, despite the challenges, in a hyperconnected future.

References

- ^ shape local and national debates (www.ned.org)

- ^ used Facebook to intervene (www.washingtonpost.com)

- ^ history (www.nbcnews.com)

- ^ hoodwinking CBS News anchor Dan Rather (www.nytimes.com)

- ^ sparked the AIDS epidemic (www.latimes.com)

- ^ Russian interference in 27 elections (doi.org)

- ^ interfered (www.wired.com)

- ^ reaching more than 126 million Americans (www.theguardian.com)

- ^ almost certainly (www.washingtonpost.com)

- ^ already (www.washingtonpost.com)

- ^ doing so (www.washingtonpost.com)

- ^ 116 attempts to influence elections (doi.org)

- ^ react (www.poynter.org)

- ^ COVID-19 pandemic (www.theverge.com)

- ^ scholar (www.law.indiana.edu)

- ^ published (papers.ssrn.com)

- ^ Netherlands (www.cfr.org)

- ^ closing (www.bbc.com)

- ^ under pressure (www.theguardian.com)

- ^ manipulated Twitter feeds (www.nytimes.com)

- ^ renamed (www.nytimes.com)

- ^ deepfakes (www.cnn.com)

- ^ engaged (www.nytimes.com)

- ^ spending more (www.cfr.org)

- ^ real-time alerts of disinformation campaigns (theconversation.com)

- ^ participating (www.nytimes.com)

- ^ Code of Practice on Disinformation (europa.eu)

- ^ agreed (ec.europa.eu)

- ^ set up (europa.eu)

- ^ naming and shaming violators (www.nytimes.com)

- ^ leading role (www.wired.com)

- ^ warmup (www.wired.com)

- ^ Biju Boro/AFP via Getty Images (www.gettyimages.com)

- ^ combat disinformation (www.poynter.org)

- ^ Muslim Cyber Army was arrested in Java (www.scmp.com)

- ^ criminalized (www.poynter.org)

- ^ Myanmar (www.globalgroundmedia.com)

- ^ public corruption (www.poynter.org)

- ^ public health crisis (www.nytimes.com)

- ^ Microsoft study (news.microsoft.com)

- ^ real-world harm (www.business-standard.com)

- ^ shut down (scroll.in)

- ^ censorship (www.nytimes.com)

- ^ Silver Screen Collection/Getty Images (www.gettyimages.com)

- ^ targets of online (www.businessinsider.com)

- ^ influence campaigns (www.ft.com)

- ^ enforcement (www.nytimes.com)

- ^ more than 50 nations (www.christchurchcall.com)

- ^ donations (www.theguardian.com)

- ^ wider view (www.csis.org)

- ^ investigations (www.nytimes.com)

- ^ data privacy (iapp.org)

- ^ Educational reforms (securityintelligence.com)

- ^ deepfakes (www.csoonline.com)

- ^ Read our newsletter (theconversation.com)

Read more https://theconversation.com/the-battle-against-disinformation-is-global-129212