Rating news sources can help limit the spread of misinformation

- Written by Antino Kim, Assistant Professor of Operations and Decision Technologies, Indiana University

Online misinformation has significant real-life consequences, such as contributing to measles outbreaks[1] and encouraging racist mass murderers[2]. It can have political consequences as well.

The problem of disinformation and propaganda misleading social media users was serious in 2016, continued unabated in 2018 and is expected to be even more severe in the coming 2020 election cycle[3] in the U.S.

Most people think they can detect deception[4] efforts online, but in our recent research, fewer than 20% of participants[5] were actually able to correctly identify intentionally misleading content. The rest did no better than they would have if they flipped a coin to decide what was real and what wasn’t.

Both psychological[6] and neurological[7] evidence shows that people are more likely to believe and pay attention to information that aligns with their political views – regardless of whether it’s true. They distrust and ignore posts that don’t line up with what they already think.

As information systems researchers, we wanted to find ways to help people distinguish true information from false – whether it confirmed what they previously thought or not, and even when it came from unknown sources. Fact-checking individual articles is a good start, but it can take days, so it usually isn’t fast enough to keep up with how quickly news travels[8].

We set out to discover the most effective way to present a source’s accuracy level to the public – that is, the way that would have the greatest effect on reducing the belief in, and spread of, disinformation.

Expert or user ratings?

One alternative is a source rating[9] based on past articles that gets attached to every new article as it is published, much like Amazon or eBay seller ratings.

The most useful ratings are those a person can use at the most relevant time – finding out about previous buyers’ experiences with a seller when considering making an online purchase, for instance.

When it comes to facts, though, there’s another wrinkle. E-commerce ratings are typically done by regular users, people with firsthand knowledge from using the item or service.

Fact-checking, on the other hand, has traditionally been done by experts like PolitiFact[10] because few people have the firsthand knowledge to rate news. By comparing user-generated ratings and expert-generated ratings, we’ve found that different rating mechanisms influence users in different ways[11].

We conducted two online experiments, with a total of 889 participants. Each person was shown a group of headlines, some labeled with accuracy ratings from experts, others labeled with ratings from other users and the remainder with no accuracy ratings at all.

We asked participants the extent to which they believed each headline and whether they would read the article, like it, comment or share it.

A sample headline with a rating from experts, as shown in our experiment.

Kim et al., CC BY-ND[12]

A sample headline with a rating from other users, as shown in our experiment.

Kim et al., CC BY-ND[13]

Expert ratings of news sources had stronger effects on belief than ratings from nonexpert users, and the effects were even stronger when the rating was low, suggesting the source was likely to be inaccurate. These low-rated inaccurate sources are the usual culprits in spreading disinformation, so our finding suggests that expert ratings are even more powerful when users need them most.

Respondents’ belief in a headline influenced the extent to which they would engage with it: The more they believed an article was true, the more likely they were to read, like, comment on or share it.

Those findings tell us that helping users mistrust inaccurate material at the moment they encounter it can help curb the spread of disinformation.

Spillover effects

We also found that applying source ratings to some headlines made our respondents more skeptical[14] of other headlines without ratings.

Facebook tried labeling headlines that were of dubious accuracy, but it didn’t help curb the spread of disinformation.

Kim et al.

This finding surprised us because other methods of warning readers – such as attaching notices only to questionable headlines – have been found to cause users to be less skeptical of unlabeled headlines[15]. This difference is especially noteworthy since Facebook’s warning flag had little influence on users and was eventually scrapped[16]. Perhaps source ratings can deliver what Facebook’s flag couldn’t.

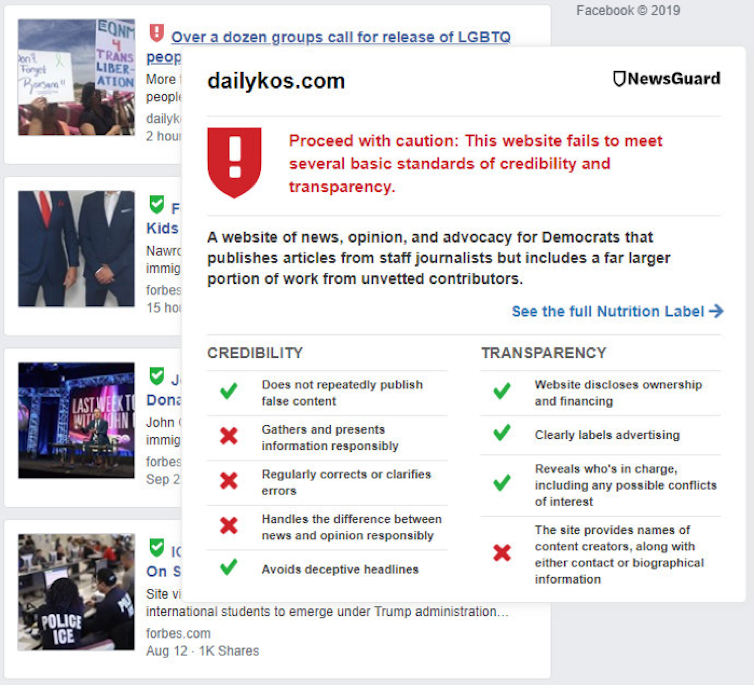

A NewsGuard rating warns Facebook users that the source may not be accurate or reliable.

Screenshot by Antino Kim

What we learned indicates that expert ratings provided by companies like NewsGuard[17] are likely more effective at reducing the spread of propaganda and disinformation than having users rate the reliability and accuracy[18] of news sources themselves. That makes sense, considering that, as we put it on BuzzFeed, “crowdsourcing ‘news’ was what got us into this mess in the first place[19].”

References

- [1] measles outbreaks (www.cdc.gov)

- [2] encouraging racist mass murderers (www.nytimes.com)

- [3] even more severe in the coming 2020 election cycle (www.cnbc.com)

- [4] people think they can detect deception (www.journalism.org)

- [5] fewer than 20% of participants (misq.org)

- [6] psychological (doi.org)

- [7] neurological (misq.org)

- [8] it usually isn’t fast enough to keep up with how quickly news travels (apnews.com)

- [9] One alternative is a source rating (misq.org)

- [10] PolitiFact (www.politifact.com)

- [11] different rating mechanisms influence users in different ways (doi.org)

- [12] CC BY-ND (creativecommons.org)

- [13] CC BY-ND (creativecommons.org)

- [14] made our respondents more skeptical (doi.org)

- [15] less skeptical of unlabeled headlines (dx.doi.org)

- [16] Facebook’s warning flag had little influence on the users and was eventually scrapped (misq.org)

- [17] NewsGuard (www.newsguardtech.com)

- [18] having users rate the reliability and accuracy (www.facebook.com)

- [19] crowdsourcing ‘news’ was what got us into this mess in the first place (www.buzzfeednews.com)

Authors: Antino Kim, Assistant Professor of Operations and Decision Technologies, Indiana University

Read more http://theconversation.com/rating-news-sources-can-help-limit-the-spread-of-misinformation-126083