The everyday ethical challenges of self-driving cars

- Written by Johannes Himmelreich, Interdisciplinary Ethics Fellow, Stanford University McCoy Family Center for Ethics in Society

A lot of discussion[1] and ethical[2] thought[3] about self-driving cars has focused on tragic dilemmas[4], like hypotheticals in which a car has to decide whether to run over a group of schoolchildren or plunge off a cliff, killing its own occupants. But those sorts of situations are extreme cases.

As the most recent crash – in which a self-driving car killed a pedestrian[5] in Tempe, Arizona – demonstrates, the mundane, everyday situations at every crosswalk, turn and intersection present much harder and broader ethical quandaries.

Ethics of extremes

As a philosopher[6] working with engineers in Stanford’s Center for Automotive Research[7], I was initially surprised that we spent our lab meetings discussing what I thought was an easy question: How should a self-driving car approach a crosswalk?

My assumption had been that we would think about how a car should decide between the lives of its passengers and the lives of pedestrians. I knew how to think about such dilemmas because these crash scenarios resemble a famous philosophical brainteaser called the “trolley problem[8].” Imagine a runaway trolley is hurtling down the tracks and is bound to hit either a group of five or a single person – would you kill one to save five?

However, many[9] philosophers[10] nowadays doubt that investigating such questions is a fruitful avenue of research. Barbara Fried[11], a colleague at Stanford, for example, has argued that tragic dilemmas make people believe ethical quandaries[12] mostly arise in extreme and dire circumstances.

In fact, ethical quandaries are ubiquitous. Everyday, mundane situations are surprisingly messy and complex, often in subtle ways. For example: Should your city spend money on a diabetes prevention program or on more social workers? Should your local Department of Public Health hire another inspector for restaurant hygiene standards, or continue a program providing free needles and injection supplies?

These questions are extremely difficult to answer because of uncertainties about the consequences – such as who will be affected and to what degree. The solutions philosophers have proposed for extreme and desperate situations are of little help here.

The problem is similar with self-driving cars. Thinking through extreme situations and crash scenarios cannot help answer questions that arise in mundane situations.

A challenge at crosswalks

One could ask: What can be so hard about mundane traffic situations like approaching a crosswalk, driving through an intersection, or making a left turn? Even if visibility at the crosswalk is limited and it is sometimes hard to tell whether a nearby pedestrian actually wants to cross the street, drivers cope with this every day.

But for self-driving cars, such mundane situations pose a challenge in two ways.

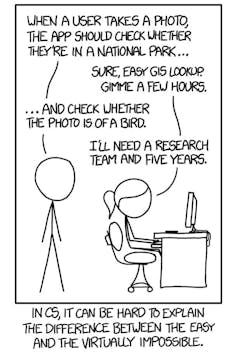

Easy for humans often means hard for computers.

XKCD, CC BY-SA[13][14]

First, there is the fact that what is easy for humans is often hard for machines[15]. Whether it is recognizing faces or riding bicycles, we are good at perception and mechanical tasks because evolution built these skills for us. That, however, makes these skills hard to teach or engineer. This is known as “Moravec’s Paradox[16].”

Second, in a future where all cars are self-driving cars, small changes to driving behavior would make a big difference in the aggregate. Decisions made by engineers today, in other words, will determine not how one car drives but how all cars drive. Algorithms become policy.

Engineers teach computers how to recognize faces and objects using methods of machine learning. They can also use machine learning to help self-driving cars[17] imitate how humans drive. But this isn’t a solution: It doesn’t solve the problem that wide-ranging decisions about safety and mobility are made by engineers.

Furthermore, self-driving cars shouldn’t drive like people. Humans aren’t actually very good drivers[18]. And they drive in ethically troubling ways, deciding whether to yield at crosswalks based on pedestrians’ age[19], race[20] and income[21]. For example, researchers in Portland[22] have found that black pedestrians are passed by twice as many cars and have to wait a third longer than white pedestrians before they can cross.

Self-driving cars should drive more safely and more fairly than people do.

Mundane ethics

The ethical problems deepen when you attend to the conflicts of interest that surface in mundane situations such as crosswalks, turns and intersections.

For example, the design of self-driving cars needs to balance the safety of others – pedestrians or cyclists – with the interests of cars’ passengers. As soon as a car goes faster than walking pace, it is unable to avoid crashing into a child who might run onto the road at the last second. But walking pace is, of course, way too slow. Everyone needs to get to places. So how should engineers strike the balance between safety and mobility? And what speed is safe enough?
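To make the speed trade-off concrete, here is a minimal sketch of the textbook stopping-distance relation: the distance covered during the system's reaction latency plus the braking distance. The latency of 0.5 seconds and the deceleration of 7 m/s² are illustrative assumptions, not figures from this article or from any particular vehicle, and the function names are hypothetical.

```python
import math


def stopping_distance_m(speed_kmh, latency_s=0.5, decel_ms2=7.0):
    """Distance covered before standstill, in metres.

    latency_s and decel_ms2 are assumed, illustrative values.
    """
    v = speed_kmh / 3.6  # convert km/h to m/s
    # Distance travelled during the latency, plus braking distance v^2 / (2a).
    return v * latency_s + v ** 2 / (2 * decel_ms2)


def max_speed_kmh_for_gap(gap_m, latency_s=0.5, decel_ms2=7.0):
    """Highest speed at which the car can still stop within gap_m metres.

    Solves v*t + v^2 / (2a) = d for the non-negative root v.
    """
    a, t = decel_ms2, latency_s
    v = -a * t + math.sqrt((a * t) ** 2 + 2 * a * gap_m)
    return v * 3.6  # convert m/s back to km/h


for speed in (5, 30, 50):
    print(f"{speed} km/h: stops within {stopping_distance_m(speed):.1f} m")
```

Because the braking term grows with the square of speed, each increase in speed buys mobility at a more than proportional cost in the distance needed to stop. Under these assumptions, that is the balance the paragraph above asks engineers to strike.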

There are other ethical questions that come up as well. Engineers need to make trade-offs between mobility and environmental impacts[23]. When they’re applied across all the cars in the country, small changes in computer-controlled acceleration, cornering and braking can have huge effects on energy use and emissions. How should engineers trade off travel efficiency against environmental impact?

What should the future of traffic be?

Mundane situations pose novel engineering and ethical problems, but they also lead people to question basic assumptions of the traffic system.

For myself, I began to question whether we need places called “crosswalks” at all. After all, self-driving cars can potentially make it safe to cross a road anywhere.

And it is not only crosswalks that become unnecessary. Traffic lights at intersections could be a thing of the past as well. Humans need traffic lights to make sure everyone gets to cross the intersection without crashes and chaos. But self-driving cars could coordinate among themselves[24] smoothly.

The bigger question here is this: Given that self-driving cars are better than human drivers, why should the cars be subject to rules that were designed for human fallibility and human errors? And to extend this thought experiment, consider also the more general question: If we, as a society, could design our traffic system from scratch, what would we want it to look like?

Because these hard questions concern everyone in a city or in a society, they require a city or society to agree on answers. That means balancing competing interests in a way that works for everybody – whether people think only about crosswalks or about the traffic system as a whole.

With self-driving cars, societies can redesign their traffic systems[25]. From the crosswalk to overall traffic design – it is mundane situations that raise really hard questions. Extreme situations are a distraction.

The trolley problem does not answer these hard questions.

References

1. discussion (www.washingtonpost.com)

2. ethical (doi.org)

3. thought (doi.org)

4. tragic dilemmas (www.newyorker.com)

5. self-driving car killed a pedestrian (www.nytimes.com)

6. philosopher (scholar.google.com)

7. Stanford’s Center for Automotive Research (cars.stanford.edu)

8. trolley problem (theconversation.com)

9. many (doi.org)

10. philosophers (doi.org)

11. Barbara Fried (law.stanford.edu)

12. tragic dilemmas make people believe ethical quandaries (doi.org)

13. XKCD (xkcd.com)

14. CC BY-SA (creativecommons.org)

15. hard for machines (en.wikipedia.org)

16. Moravec’s Paradox (www.hup.harvard.edu)

17. help self-driving cars (theconversation.com)

18. Humans aren’t actually very good drivers (www.nhtsa.gov)

19. age (doi.org)

20. race (doi.org)

21. income (doi.org)

22. researchers in Portland (nitc.trec.pdx.edu)

23. environmental impacts (doi.org)

24. themselves (youtu.be)

25. redesign their traffic systems (nacto.org)

Read more http://theconversation.com/the-everyday-ethical-challenges-of-self-driving-cars-92710